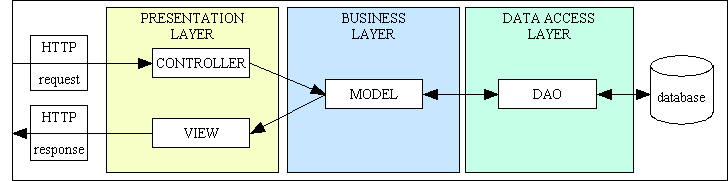

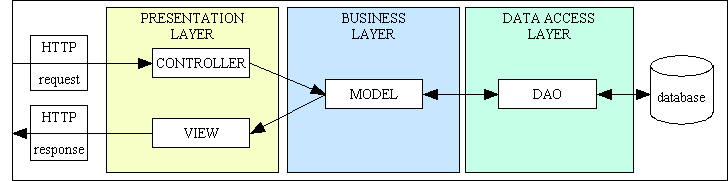

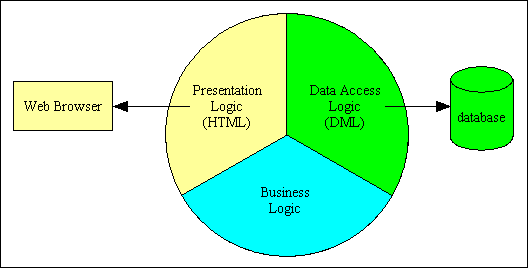

Figure 1 - MVC plus 3 Tier Architecture

The premise of the article SOLID OO Principles is that in order to be any "good" at OO programming or design you must follow the SOLID principles otherwise it will be highly unlikely that you will create a system which is maintainable and extensible over time. This to me is another glaring example of the Snake Oil pattern, or "OO programming according to the church of <enter your favourite religion here>". These principles are nothing but fake medicine being presented to the gullible as the universal cure-all. In particular the words "highly unlikely" lead me to the following observations:

Some of these principles may have merit in the minds of their authors, but to the rest of us they may be totally worthless. For example, some egotistical zealot invents the rule "Thou shalt not eat cheese on a Monday!" What happens if I ignore this rule? Does the world come to an end? Does the sun stop shining? Do the birds stop singing? Does the grass stop growing? Does my house burn down? Does my dog run away? Does my hair fall out? If I ignore this rule and nothing bad happens, then why am I "wrong"? If I follow this rule and nothing good happens, then why am I "right"?

It is possible to write programs which are maintainable and extensible WITHOUT following these principles, so following them is no guarantee of success, just as not following them is no guarantee of failure. Rather than being "solid" these principles are vague, wishy-washy, airy-fairy, and have very little substance at all. They are open to interpretation and therefore mis-interpretation and over-interpretation. Any competent programmer will be able to see through the smoke with very little effort.

If computer programming is supposed to be part of that discipline called Computer Science then I expect its rules and principles to be written with scientific precision. Sadly they are not. This lack of precision with the principles is therefore responsible for the corresponding lack of precision with their implementation. Less precision means more scope for mis-interpretation which in turn leads to more scope for errors.

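My own development infrastructure is based on the 3 Tier Architecture, which means that it contains three separate layers: the Presentation layer, the Business layer and the Data Access layer.

It also contains an implementation of the Model-View-Controller (MVC) design pattern, which means that it contains three types of component: the Model, the View and the Controller.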

These two patterns, and the way they overlap, are shown in Figure 1:

Figure 1 - MVC plus 3 Tier Architecture

Please note that the 3 Tier Architecture and Model-View-Controller (MVC) design pattern are not the same thing.

The results of my approach clearly show the following:

Yet in spite of all this my critics (of which there are many) still insist that my implementation is wrong simply because it breaks the rules (or their interpretation of their chosen rules).

My primary criticism of each of the SOLID principles is that, like the whole idea of Object Oriented Programming, the basic rules may be complete in the mind of the original authors, but when examined by others they are open to vast amounts of interpretation and therefore mis-interpretation. These "others" fall roughly into one of two camps - the moderates and the extremists.

My secondary criticism of these principles is that they do not come with a "solid" reason for using them. If they are supposed to be a solution to a problem then I want to see the following:

If the only bad thing that happens if I choose to ignore any of these principles is that I offend someone's delicate sensibilities (Aw Diddums!), then I'm afraid that the principle does not have enough substance for me to bother with it, in which case I can consign it to the dustbin and not waste any of my valuable time on it.

It is also worth noting here that some of the problem/solution combinations I have come across on the interweb thingy are restricted to a particular language or a particular group of languages. PHP is a dynamically-typed scripting language, and therefore does not have the problems encountered in a strongly-typed or compiled language. PHP may also achieve certain things in a different manner from other languages, so something which may be a problem in one of those other languages simply doesn't exist as a problem in PHP.

There is an old axiom in the engineering world which states "If it ain't broke then don't fix it". If I have code that works why should I change it so that it satisfies your idea of how it should be written? Refactoring code unnecessarily is often a good way to introduce new bugs into the system.

A similar saying is "If I don't have your problem then I don't need your solution". Too many so-called "experts" see an idea or design pattern that has benefits in a limited set of circumstances, so they instantly come up with a blanket rule that it should be implemented in all circumstances without further thought. If you are not prepared to think about what you are doing, and why, then how can you be sure that you are not introducing a problem instead of a solution?

Another old saying is "Prevention is better than Cure". Sometimes a proposed solution does nothing more than mask the symptoms of the problem instead of actually curing it. For example, if your software structure is different from your database structure then the popular solution is to implement an Object Relational Mapper to deal with the differences. My solution would be totally different - eliminate the problem by not having incompatible structures in the first place!

Since a major motivation for object-oriented programming is software reuse it should follow that only those principles and practices which promote the production of reusable software should be eligible for nomination as "best". It should also follow that out of the many possible ways in which any one of these principles may be implemented, the only one which should be labelled as being the "best" should be the one that produces the "most" reusable software. There is no such thing as a single perfect or definitive implementation which must be duplicated as different programmers may devise different ways to achieve the desired objectives by using a combination of their own intellect, experience and the capabilities or restrictions of the programming language being used. Remember also that "best" is a comparative term which means "better than the alternatives", but far too many of today's dogmatists refuse to even acknowledge that any alternatives actually exist. If any alternatives are proposed their practitioners are automatically labelled as being heretics who should be ignored, or perhaps vilified, chastised, or even burned at the stake.

The reason that I do not follow a large number of these so-called "best practices" is not because I'm an anarchist, it is simply because I did not know that they existed. When they were pointed out to me years later I could instantly see that they would either add unnecessary code or reduce the amount of reusable code which I had already created, so I decided that they would be a waste of time and effort and so chose to ignore them.

The Single Responsibility Principle (SRP), also known as Separation of Concerns (SoC), states that an object should have only a single responsibility, and that responsibility should be entirely encapsulated by the class. All its services should be narrowly aligned with that responsibility. But what is this thing called "responsibility" or "concern"? How do you know when a class has too many and should be split? When you start the splitting process, how do you know when to stop?

In his original paper Robert C. Martin (Uncle Bob) wrote the following:

This principle was described in the work of Tom DeMarco and Meilir Page-Jones. They called it cohesion. They defined cohesion as the functional relatedness of the elements of a module. In this chapter we'll shift that meaning a bit, and relate cohesion to the forces that cause a module, or a class, to change.

SRP: The Single Responsibility Principle

A CLASS SHOULD HAVE ONLY ONE REASON TO CHANGE.

SRP was supposed to be based on something called cohesion which was defined as "functional relatedness", but instead it was converted to "reason to change" which caused no end of confusion and mis-interpretation. Instead of "shifting the meaning a bit" what he actually did was mangle it beyond recognition.

In his article Test Induced Design Damage? Uncle Bob tried to redefine his original description to make it less ambiguous:

How do you separate concerns? You separate behaviors that change at different times for different reasons. Things that change together you keep together. Things that change apart you keep apart.

GUIs change at a very different rate, and for very different reasons, than business rules. Database schemas change for very different reasons, and at very different rates than business rules. Keeping these concerns [GUI, business rules, database] separate is good design.

Did you notice that in his article on SRP he asked the question "How do you separate concerns?"

This means that "concern" and "responsibility" mean exactly the same thing, that "concerned with" and "responsible for" mean exactly the same thing.

If you take a look at Figure 1 you will see that the GUI is handled in the Presentation layer, business rules are handled in the Business layer, and database access is handled in the Data Access layer. This conforms to Uncle Bob's description, so how can it possibly be wrong?

In a later article called The Single Responsibility Principle Uncle Bob tried to answer the following question:

What defines a reason to change?

Some folks have wondered whether a bug-fix qualifies as a reason to change. Others have wondered whether refactorings are reasons to change.

The only sensible answer to this question which I found in his article was:

This is the reason we do not put SQL in JSPs. This is the reason we do not generate HTML in the modules that compute results. This is the reason that business rules should not know the database schema. This is the reason we separate concerns.

Did you notice that YET AGAIN in his article on SRP he states "This is the reason we separate concerns"?

What Uncle Bob is describing here is the 3-Tier Architecture which has three separate layers - the Presentation layer, the Business layer and the Data Access layer - and which I have implemented in my framework. This architecture also has its own set of rules which I have followed to the letter. So if I have split my application into the three separate layers which were identified by Uncle Bob then who are you to tell me that I am wrong?

Later on in the same article Uncle Bob also says the following:

Another wording for the Single Responsibility Principle is: Gather together the things that change for the same reasons. Separate those things that change for different reasons.

If you think about this you'll realize that this is just another way to define cohesion and coupling. We want to increase the cohesion between things that change for the same reasons, and we want to decrease the coupling between those things that change for different reasons.

Unfortunately there are some people out there who skip over the bit where Uncle Bob identifies the three areas of responsibility which should be separated - GUI, business rules and database - and instead focus on the term "reason to change". By focusing on the wrong term, then applying over-enthusiastic or even perverse interpretations of this term, this causes them to go far, far beyond the three areas identified by Uncle Bob, and I'm afraid that this is where they and I part company.

In his book Agile Principles, Patterns, and Practices in C# Robert C. Martin wrote about the Single Responsibility Principle, stating the following:

A better design is to separate the two responsibilities (computation and GUI) into two completely different classes as shown in Figure 8-2.

...

Figure 8-4 shows a common violation of the SRP. The Employee class contains business rules and persistence control. These two responsibilities should almost never be mixed. Business rules tend to change frequently, and though persistence may not change as frequently, it changes for completely different reasons. Binding business rules to the persistence subsystem is asking for trouble.

It is quite clear to me that he is saying that GUI logic, business logic and database (persistence) logic are separate responsibilities which have different reasons to change therefore should each be in their own class.

Martin Fowler also describes this separation into three layers in his article PresentationDomainDataLayering where he refers to the "Business" layer as the "Domain" layer, but then he goes and screws it up by introducing a Service layer and a Data Mapper layer which I consider to be both useless and unnecessary. My code works perfectly well without them, so they can't be that important. However, in his article AnemicDomainModel Martin Fowler says the following:

It's also worth emphasizing that putting behavior into the domain objects should not contradict the solid approach of using layering to separate domain logic from such things as persistence and presentation responsibilities. The logic that should be in a domain object is domain logic - validations, calculations, business rules - whatever you like to call it.

Here you can see that splitting an application into three areas of responsibility - presentation logic, domain logic and persistence logic - is a perfectly acceptable approach. All I have done is extend it slightly by splitting the Presentation layer into two, thus providing me with a View and Controller which then conforms to the MVC design pattern.

The idea that the 3-Tier Architecture and separation of responsibilities are intertwined was documented by Craig Larman in his book, first published in 1997, called Applying UML and Patterns in which, when describing the three-tier architecture, he said the following:

One common architecture for information systems that includes a user interface and persistent storage of data is known as the three-tier architecture. A classic description of the vertical tiers is:

- Presentation - windows, reports, and so on.

- Application Logic - tasks and rules which govern the process.

- Storage - persistent storage mechanism.

The singular quality of a three-tier architecture is the separation of the application logic into a distinct logical middle tier of software. The presentation tier is relatively free of application processing; windows forward task requests to the middle tier. The middle tier communicates with the back-end storage layer.

This architecture is in contrast to a two-tier design, in which, for example, application logic is placed within window definitions, which read and write directly to a database; there is no middle tier that separates out the application logic. A disadvantage of a two-tier design is the inability to represent application logic in separate components, which inhibits software reuse. It is also not possible to distribute application logic to a separate computer.

A recommended multi-tier architecture for object-oriented information systems includes the separation of responsibilities implied by the classic three-tier architecture. These responsibilities are assigned to software objects.

Note here that Craig Larman linked the three-tier architecture and the separation of responsibilities way back in 1997, which is several years before Robert C. Martin published his Single Responsibility Principle in 2003.

Using the 3-Tier Architecture has been of great benefit in the development of my framework and the enterprise applications which I have created using it. That is why if I want to switch the DBMS from MySQL to something else like PostgreSQL, Oracle or SQL Server I need only change the component in the Data Access layer. If I want to change the output from HTML to something else like PDF, CSV or XML I need only change the component in the Presentation layer. Each component in the Business layer handles the data for a single business entity (database table), so this only changes if the table's structure, data validation rules or business rules change. The Business layer is not affected by a change in the DBMS engine, nor a change in the way its data is presented to the user.
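As a rough illustration of that separation, here is a minimal sketch in Python (not the PHP of my framework, and not its actual code). Every name in it (`DataAccess`, `InMemoryDataAccess`, `Customer`, `render_html`, `render_csv`) and the "key account" rule are assumptions invented for the example. It only aims to show that the business object does not change when the storage component or the output format is swapped.

```python
from abc import ABC, abstractmethod
import csv
import io


class DataAccess(ABC):
    """Data Access layer: the only place that knows how rows are stored."""

    @abstractmethod
    def fetch_all(self, table):
        ...


class InMemoryDataAccess(DataAccess):
    """Hypothetical stand-in for a MySQL/PostgreSQL/Oracle driver class."""

    def __init__(self, tables):
        self._tables = tables

    def fetch_all(self, table):
        # Return copies so callers cannot alter the stored rows.
        return [dict(row) for row in self._tables.get(table, [])]


class Customer:
    """Business layer: one entity (table) and its rules, no SQL and no HTML."""

    table = "customer"

    def __init__(self, dao):
        self._dao = dao

    def get_data(self):
        rows = self._dao.fetch_all(self.table)
        # Business rule invented for illustration: flag large accounts.
        for row in rows:
            row["is_key_account"] = row["balance"] >= 1000
        return rows


def render_html(rows):
    """Presentation layer, HTML flavour."""
    body = "".join(
        "<tr>" + "".join(f"<td>{value}</td>" for value in row.values()) + "</tr>"
        for row in rows
    )
    return f"<table>{body}</table>"


def render_csv(rows):
    """Presentation layer, CSV flavour; the business object is untouched."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


dao = InMemoryDataAccess({"customer": [{"name": "Acme", "balance": 1500}]})
rows = Customer(dao).get_data()
html_output = render_html(rows)
csv_output = render_csv(rows)
```

Replacing `InMemoryDataAccess` with a class for a different DBMS, or `render_html` with `render_csv`, requires no change to `Customer`.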

So if my implementation follows the descriptions provided by Uncle Bob, who invented the term, by Martin Fowler, who is the author of Patterns of Enterprise Application Architecture, and by Craig Larman in his book Applying UML and Patterns, then who the hell are you to tell me that I am wrong?

If you read what Uncle Bob wrote you would also see the following caveat:

If, on the other hand, the application is not changing in ways that cause the two responsibilities to change at different times, then there is no need to separate them. Indeed, separating them would smell of Needless Complexity.

There is a corollary here. An axis of change is only an axis of change if the changes actually occur. It is not wise to apply the SRP, or any other principle for that matter, if there is no symptom.

Another way of saying that last sentence is:

If you don't have the problem for which a solution was devised, then you don't need that solution.

What he is saying here is that you should not go too far by putting every perceived responsibility in its own class otherwise you may end up with code that is more complex than it need be. You have to strike a balance between coupling and cohesion, and this requires intelligent thought and not the blind application of an academic theory. For example, the domain object (the Model in MVC, or the Business layer in 3TA) should contain only business rules (note the plural) and not any presentation or database logic, but some people go too far and treat each individual business rule as a separate "reason for change" and demand that each one be put in its own class. This has the effect of replacing cohesion with fragmentation.
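To make the difference between cohesion and fragmentation concrete, here is a small sketch in Python. The `OrderLine` entity, its fields and its three rules are all hypothetical, invented purely for illustration. It shows one domain object holding several business rules (plural) and a calculation, with no presentation or database logic.

```python
from dataclasses import dataclass


@dataclass
class OrderLine:
    """Hypothetical business entity; the field names and rules are invented."""

    quantity: int
    unit_price: float
    discount_percent: float = 0.0

    def validate(self):
        """Return every rule violation for this entity from one place."""
        errors = []
        if self.quantity <= 0:
            errors.append("quantity must be greater than zero")
        if self.unit_price < 0:
            errors.append("unit_price must not be negative")
        if not 0 <= self.discount_percent <= 50:
            errors.append("discount_percent must be between 0 and 50")
        return errors

    def total(self):
        """A calculation, which is also domain logic for this entity."""
        gross = self.quantity * self.unit_price
        return round(gross * (1 - self.discount_percent / 100), 2)


good = OrderLine(quantity=3, unit_price=10.0, discount_percent=10)
bad = OrderLine(quantity=0, unit_price=-1.0, discount_percent=80)
```

Putting each of those three rules in its own class would give three classes which all need the same `OrderLine` data, plus something extra to call them in turn, which is more code with less cohesion.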

In his original article Uncle Bob stated the following:

A class should have one and only one reason to change

But what is a "reason to change" and how is it related to "responsibility"? If a class contained several of these "reasons" it violated SRP, so to demonstrate conformance the programmer had to isolate each of these "reasons" and put them into separate classes so that each class then had a single "reason". When I first read this document I thought WTF! because that description was so vague and meaningless it could mean virtually anything. And so it came to pass. Some folks have wondered whether a bug-fix qualifies as a reason to change. Others have wondered whether refactorings are reasons to change. Others come up with definitions which are even stranger. For example, in this post I described how I would classify the methods in my abstract table class using these words:

It is an abstract class which contains all the methods which may be used when a Controller calls a Model. The methods fall into one of the following categories:

- Methods which allow it to be called by the layer above it (the Controller or the View)

- Methods which allow it to call the layer below it (the Data Access Object).

- Methods which sit between these two. Many of these are "customisable" methods which are empty, but could be filled with code in order to override the default behaviour.

In this post one of my critics responded with:

Wouldn't you say these are 3 different responsibilities?

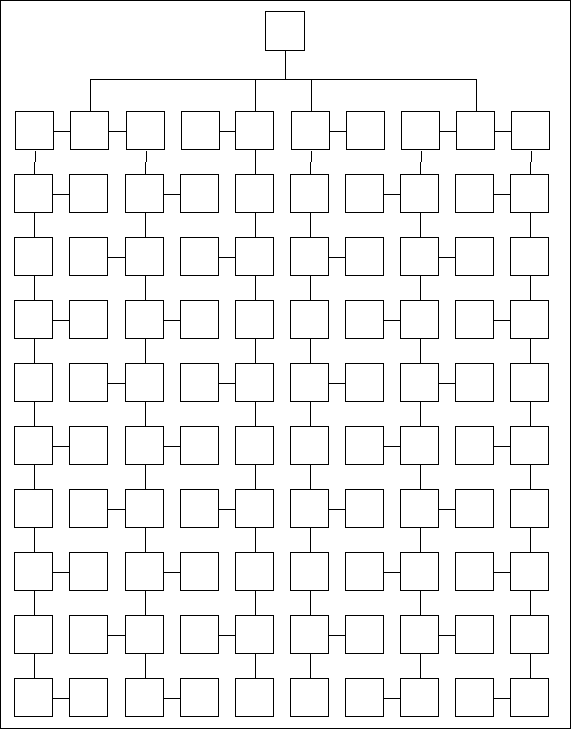

Here he is saying that those three categories represent a different "reason to change" therefore, according to his logic, the methods which fall into each of those categories should be separated out and moved to a separate class which then has methods which fall into only one of those categories. For example, Figure 2 shows one of my offending classes which causes people like him to foam at the mouth.

Figure 2 - Reason For Change - Before

To make it simple for the intellectually challenged I have given each of those three categories its own colour:

If I were to take the methods in each of those categories and put them into separate classes I would end up with the structure shown in Figure 3.

Figure 3 - Reason For Change - After

If you are awake you should see the flaw in this argument. In order for this new structure to work I have to add new sets of methods into each of those classes:

This then means that each of those new classes contains methods which fall into more than one of those three categories, so they need to be split again. These new classes still suffer from that problem, so you will have to split them again, ... and again, ... and again, ad infinitum in a perpetual loop. Do you still think that this is a good idea?
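For readers who want to picture the three categories of method in a single abstract table class, the following is a minimal sketch in Python. It is not the actual class from my framework: `AbstractTable`, `insert_record`, the underscore-prefixed method names, `ListDao` and `Product` are all hypothetical names chosen for the example.

```python
class AbstractTable:
    """Sketch of an abstract table class; every name here is hypothetical."""

    table_name = ""

    def __init__(self, dao):
        self._dao = dao
        self.errors = []

    # Category 1: called by the layer above (a Controller).
    def insert_record(self, row):
        self.errors = []
        row = self._pre_insert(row)
        self._validate_insert(row)
        if not self.errors:
            row = self._dao_insert(row)
            row = self._post_insert(row)
        return row

    # Category 2: calls the layer below (the Data Access Object).
    def _dao_insert(self, row):
        return self._dao.insert(self.table_name, row)

    # Category 3: "customisable" methods, which do nothing by default.
    def _pre_insert(self, row):
        return row

    def _validate_insert(self, row):
        pass

    def _post_insert(self, row):
        return row


class ListDao:
    """Stand-in Data Access Object that keeps rows in a list."""

    def __init__(self):
        self.rows = []

    def insert(self, table, row):
        self.rows.append((table, dict(row)))
        return row


class Product(AbstractTable):
    """Concrete table class: only the customisable methods are filled in."""

    table_name = "product"

    def _pre_insert(self, row):
        return {**row, "code": row["code"].strip().upper()}

    def _validate_insert(self, row):
        if row["price"] <= 0:
            self.errors.append("price must be greater than zero")


dao = ListDao()
product = Product(dao)
saved = product.insert_record({"code": " ab1 ", "price": 5})
rejected = product.insert_record({"code": "zz9", "price": 0})
rejected_errors = list(product.errors)
```

Note that a single call to `insert_record` passes through all three categories. Moving each category into its own class would mean adding forwarding methods between those classes so that the same call could still be completed.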

In his article The Single Responsibility Principle which Uncle Bob published in 2014 he wrote the following:

And this gets to the crux of the Single Responsibility Principle. This principle is about people. When you write a software module, you want to make sure that when changes are requested, those changes can only originate from a single person, or rather, a single tightly coupled group of people representing a single narrowly defined business function. You want to isolate your modules from the complexities of the organization as a whole, and design your systems such that each module is responsible (responds to) the needs of just that one business function.

Why? Because we don't want to get the COO fired because we made a change requested by the CTO.

I have been involved with building applications, both bespoke and packages, for four decades, and the rules for requesting changes are always the same - they have to be documented, then put through an impact analysis to determine the scope of the change, what it is supposed to affect, how long it will take and how much it will cost. This should also include a test plan which shows that the amended code is producing the expected results. The Request For Change (RFC) MUST always come from an authorised individual or group of individuals, and it is up to that individual or group to ensure that the request is acceptable to all users of the system. This means that if the COO gets fired because he requested a change that upset the CTO then my only response will be "Oh dear. What a pity. How sad."

The wikipedia page contains the following statement:

A module should be responsible to one, and only one, actor. The term actor refers to a group (consisting of one or more stakeholders or users) that requires a change in the module.

This is complete and utter nonsense. When I am writing a piece of software I do not care who requested it or who will be using it, all I care about is writing code that meets the documented requirements. In large applications which can be accessed by many people it is true that not every person will have access to every program within that application, but that has absolutely zero effect on how that program is written. A program does what it does regardless of who is running it. Control over who can or cannot access a particular program is not something which is handled within the program, it is (or should be) handled by a separate piece of software known as an Access Control List (ACL) or Role Based Access Control (RBAC) system. The RADICORE framework has its own inbuilt RBAC system which automatically filters out from any menu any program to which the current user has not been granted access. If they cannot see an option they cannot run it.
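The idea of keeping access control outside the programs themselves can be sketched in a few lines of Python. This is an illustration only and does not show how RADICORE's RBAC system is actually implemented. The task names, role names, and the `visible_menu` and `can_run` functions are all assumptions made up for the example.

```python
# Hypothetical menu of programs (tasks) in an application.
MENU = ["list_customers", "add_customer", "delete_customer", "run_payroll"]

# Hypothetical grants: which role may run which tasks.
ROLE_GRANTS = {
    "clerk": {"list_customers", "add_customer"},
    "manager": {"list_customers", "add_customer", "delete_customer"},
}


def visible_menu(menu, roles, grants):
    """Return only the menu options granted to at least one of the user's roles."""
    allowed = set()
    for role in roles:
        allowed |= grants.get(role, set())
    # Keep the original menu order; unknown roles grant nothing.
    return [task for task in menu if task in allowed]


def can_run(task, roles, grants):
    """The same check applied to a single task before it is started."""
    return task in visible_menu([task], roles, grants)


clerk_menu = visible_menu(MENU, ["clerk"], ROLE_GRANTS)
manager_menu = visible_menu(MENU, ["manager"], ROLE_GRANTS)
```

None of the tasks in `MENU` contains a single line of code about who is allowed to run it, so changing who can access what means changing the data in `ROLE_GRANTS` and not the programs.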

Believe it or not there are some numpties out there who think that The Single Responsibility Principle (SRP) and Separation of Concerns (SoC) do NOT mean the same thing. The logic they use goes something like this:

SoC's scope is at a higher framework/application level. Like...separation of concerns between areas of the framework and application to split them up in logical parts, so they are more modular, reusable and easier to change.

SRP's scope is at the lower class level. It describes how classes should be formed, so they are also more modular, reusable and easier to change.

The goals of both concepts are the same, however, you can't always say you have successfully complied with SoC and it directly means you have properly done SRP too.

If they are different, then why don't they both appear in SOLID?

How can I possibly say that they are the same? Answer: by reading what Robert C. Martin actually wrote. If you look at his articles on SRP which I have quoted above you will see statements such as "How do you separate concerns?" and "This is the reason we separate concerns". If he refers to "separation of concerns" in his articles on SRP then how is it possible for anyone to say that they are totally different principles? Furthermore, in the article The Single Responsibility Principle he includes the following references to other principles which existed at the time:

In 1974 Edsger Dijkstra wrote another classic paper entitled On the role of scientific thought (PDF). in which he introduced the term: The Separation of Concerns.

During the 1970s and 1980s the notions of Coupling and Cohesion were introduced by Larry Constantine, and amplified by Tom DeMarco, Meilir Page-Jones and many others.

In the late 1990s I tried to consolidate these notions into a principle, which I called: The Single Responsibility Principle.

It is quite clear to me that "these notions" in the 3rd paragraph refers to a consolidation of all the notions mentioned in the previous 2 paragraphs. In other words:

SoC + Coupling + Cohesion = SRP

To me this means that both SoC and SRP are different ways of achieving high cohesion.

This opinion is also supported in the article The Art of Separation of Concerns which contains statements such as the following:

The Principle of Separation of Concerns states that system elements should have exclusivity and singularity of purpose. That is to say, no element should share in the responsibilities of another or encompass unrelated responsibilities.

Separation of concerns is achieved by the establishment of boundaries. A boundary is any logical or physical constraint which delineates a given set of responsibilities. Some examples of boundaries would include the use of methods, objects, components, and services to define core behavior within an application; projects, solutions, and folder hierarchies for source organization; application layers and tiers for processing organization; and versioned libraries and installers for product release organization.

Though the process of achieving separation of concerns often involves the division of a set of responsibilities, the goal is not to reduce a system into its indivisible parts, but to organize the system into elements of non-repeating sets of cohesive responsibilities. As Albert Einstein stated, "Make everything as simple as possible, but not simpler."

In his article the author switches between the words "concern" and "responsibility" multiple times without giving any indication that he thinks they have different meanings. On the contrary, he clearly demonstrates that the two words are interchangeable and that SoC and SRP are nothing more than saying the same thing using different words. I have yet to see any article by a reputable authority which states that these two principles are different and must be performed separately and independently.

People who understand the English language should know that sometimes there are different words which have the same meaning and sometimes there is a word which can have a different meaning depending on the context. When it comes to giving a label to a block of code which describes what it does both of these sentences mean the same thing:

Some people like to say that there is a difference simply because SoC uses the plural while SRP uses the singular. Let me dissect and destroy that argument. You start with a monolithic piece of code which contains a mixture of GUI logic, business logic and database logic. Uncle Bob has already identified these three types of logic as being a separate concern/responsibility. You then split that code which deals with multiple concerns/responsibilities into smaller modules each of which then deals with only a single concern/responsibility. You start off with a single "something" that does multiple (plural) "concerns/responsibilities" and you split/separate it to produce multiple (plural) "somethings" each of which handles a single "concern/responsibility". The use of singular and plural does not constitute a totally different concept as they are two sides of the same coin. From one point of view you are starting with a singular and ending with a plural while from the other point of view you are starting with a plural and ending with several singulars. The end result is exactly the same, so saying that the description which transforms a singular into the plural is different from the description which transforms a plural into several singulars IMHO cannot possibly be regarded as a rational argument.

I have already established that SRP and the 3-Tier Architecture are the same, and if you read this description of Separation of concerns you will see the following sentence:

Layered designs in information systems are another embodiment of separation of concerns (e.g., presentation layer, business logic layer, data access layer, persistence layer).

Is that or is it not a description of the N-Tier Architecture? If the results of applying these two principles are the same then how is it possible to say that they are different?

Other ridiculous claims include the following:

Believe it or not there are some numpties out there who think that if an entity has business rules (note the plural) that each rule should be in its own class. They say that each rule has a separate "reason for change" which is the trigger point in Uncle Bob's original description of SRP. In his follow-up articles he expressly identified three and only three concerns which he considers as candidates for separation - GUI, business rules, and database. He did not say that this was just the start, that further separation was needed, so it is reasonable to assume that everything in each of those three areas can be treated as a single concern which can be encapsulated into its own object.

This assumption/opinion was reinforced by what Martin Fowler wrote in AnemicDomainModel:

It's also worth emphasizing that putting behavior into the domain objects should not contradict the solid approach of using layering to separate domain logic from such things as persistence and presentation responsibilities. The logic that should be in a domain object is domain logic - validations, calculations, business rules - whatever you like to call it.

Note that he says a domain object (singular) and validations, calculations, business rules (plural). In the same article he quotes from the book Domain-Driven Design by Eric Evans where he says the following:

Domain Layer (or Model Layer): Responsible for representing concepts of the business, information about the business situation, and business rules. State that reflects the business situation is controlled and used here, even though the technical details of storing it are delegated to the infrastructure. This layer is the heart of business software.

The wikipedia article on Separation of Concerns contains a reference to The Art of Separation of Concerns which says the following:

The Principle of Separation of Concerns states that system elements should have exclusivity and singularity of purpose. That is to say, no element should share in the responsibilities of another or encompass unrelated responsibilities. Separation of concerns is achieved by the establishment of boundaries. A boundary is any logical or physical constraint which delineates a given set of responsibilities.

In that article it uses the word "responsibility" over 18 times, so in that author's mind there is NO distinction between "concern" and "responsibility". The two words mean the same thing, they are interchangeable, they are NOT different. I actually posted a question to that article in which I specifically asked if "concern" and "responsibility" meant the same thing or were different, and if Separation of Concerns meant the same thing as Single Responsibility Principle, and this was his reply:

A responsibility is another way of referring to a concern. The Single Responsibility Principle is a class-level design principle that pertains to restricting the number of responsibilities a given class has.

It is quite clear to me that a Domain/Business/Model object should therefore contain ALL the relevant information for that object. To split it into smaller classes would therefore violate encapsulation and eliminate high cohesion, thus reducing the quality of the application.
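As a minimal sketch of what "one domain object, many rules" could look like, the class below keeps several validations for a single entity together. The class name `Product`, the field names and the rules themselves are all hypothetical and are not taken from any real application.

```php
<?php
// Hypothetical entity class: every business rule for a product lives here,
// rather than each rule being given a class of its own.
class Product
{
    /**
     * Apply all the rules for this entity to one row of data.
     * Returns an array of error messages keyed by field name (empty if valid).
     */
    public function validate(array $row): array
    {
        $errors = [];

        // Rule 1: a name must be supplied.
        if (trim((string)($row['name'] ?? '')) === '') {
            $errors['name'] = 'Name is required';
        }

        // Rule 2: the price must be a number which is not negative.
        if (!is_numeric($row['price'] ?? null) || $row['price'] < 0) {
            $errors['price'] = 'Price must be a non-negative number';
        }

        // Rule 3: a cross-field check on two dates held as YYYY-MM-DD strings.
        if (isset($row['start_date'], $row['end_date'])
            && $row['end_date'] < $row['start_date']) {
            $errors['end_date'] = 'End date cannot be earlier than start date';
        }

        return $errors;
    }
}
```

Adding a fourth rule here means adding a few lines to one method in one class, and anyone looking for the rules that apply to a product has only one place to look.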

The idea behind this principle is that you can take a huge monolithic piece of code and break it down into smaller self-contained modules. This means that you must start with something big and then split it into smaller units. What is the biggest thing in an application? Why the whole application, of course. Nobody who is sane would suggest that you put the entire application into a single class, just as you would not put all of your data into a single table in your database, so the application should be split into smaller units, but what are these "smaller units"? A transaction processing application is comprised of a number of different components which are known as user transactions which can be selected from a menu to allow a user to complete a task. In a database application each component performs one or more operations on one or more database tables, and there may be multiple components performing the same set of operations on different tables. These components are usually developed one at a time and different developers should be able to work on different components at the same time without treading on each other's toes.

In time an application can grow to have hundreds or even thousands of user transactions, so the starting point for this splitting process should be the user transaction. Every user transaction has code which deals with the user interface, business rules and database access, and it is considered "good design" if the code for each of these areas is separated out into its own module. A beneficial consequence of this process could be that some of these modules end up by being sharable among several or even many user transactions, so you could end up with more reusable code yet less code overall. For example, in my own development environment I have implemented a combination of the 3-Tier Architecture and MVC design pattern which allows me to have the following reusable components:

Although the splitting process may appear to do nothing but increase the number of components, if you can make some of those components sharable and reusable you may actually be able to reduce the total number of components overall.

Be aware that if you take this splitting process too far then instead of a modular system comprised of cohesive units you will end up with a fragmented system where all unity is destroyed. A system which contains a huge number of tiny fragments will have increased coupling and decreased readability when compared with a system which contains a smaller number of cohesive and unified modules.

Some more thoughts on this topic can be found at:

As far as I am concerned the following practices are genuine violations of SRP:

If you build a user transaction where all the code is contained within a single script you have an example of the architecture shown in Figure 4:

Figure 4 - The 1-Tier Architecture

This is a classic example of a monolithic program which does everything, and which is difficult to change. In the OO world an object which tries to do everything is known as a "God" object. This means that you cannot make a change in one of those areas without having to change the whole component. It is only by splitting that code into separate components, where each component is responsible for only one of those areas, that you will have a modular system with reusable and interchangeable modules. This satisfies Robert C. Martin's description as it allows you to make a change in any one of those layers without affecting any of the others. You can take this separation a step further by splitting the Presentation layer into separate components for the View and Controller, as shown in Figure 1.

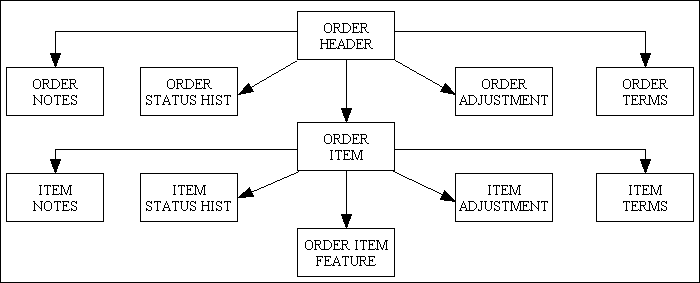

Another problem I have encountered quite often in other people's designs is deciding on the size and scope of objects in the Business layer (or Model in the MVC design pattern). As I design and build nothing but database applications my natural inclination is to create a separate class for each database table, but I have been told on more than one occasion that this is not good OO. This means that the "proper" approach, according to those people who consider themselves to be experts in such matters, is to create compound objects which deal with multiple database tables. The structure shown in Figure 5 identifies a single Sales Order object which has data spread across several tables in the database:

Figure 5 - Single object dealing with multiple tables

Far too many OO programmers seem to think that the concept of an "order" requires a single class which encompasses all of the data even when that data is split across several database tables. What they totally fail to take into consideration is that it will be necessary, within the application, to write to or read from tables individually rather than collectively. Each database table should really be considered as a separate entity in its own right by virtue of the fact that it has its own properties and its own validation rules, with the only similarity being that it shares the same CRUD operations as all the other tables. The compound class will therefore require separate methods for each table within the collection, and these method names must contain the name of the table on which they operate and the operation which is to be performed. This in turn means that there must be special controllers which reference these unique method names, which in turn means that the controller(s) are tightly coupled to this single compound class. As tight coupling is supposed to be a bad thing, how can this structure be justified?
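The coupling argument above can be sketched as follows. This is only an illustration under stated assumptions: the names `Table`, `OrderHeader`, `OrderItem` and `addController` are hypothetical, and an in-memory array stands in for the database. The point is that when every table class exposes the same method names, one controller can work with any of them.

```php
<?php
// Hypothetical base class: every table shares the same CRUD method names.
abstract class Table
{
    protected string $tableName = '';

    /** @var array<int, array> rows held in memory as a stand-in for the database */
    protected array $rows = [];

    public function insertRecord(array $row): array
    {
        $this->rows[] = $row;
        return $row;
    }

    public function getData(): array
    {
        return $this->rows;
    }

    public function getTableName(): string
    {
        return $this->tableName;
    }
}

// One class per table; each could carry its own properties and validation rules.
class OrderHeader extends Table
{
    protected string $tableName = 'order_header';
}

class OrderItem extends Table
{
    protected string $tableName = 'order_item';
}

// A single controller serves every table class because it never needs to know
// a table-specific method name such as a hypothetical insertOrderItem().
function addController(Table $model, array $input): array
{
    return $model->insertRecord($input);
}
```

A compound Sales Order class would instead need a differently named method for each table it manages, and a controller written against those names could not be reused with any other class.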

When splitting a large piece of code into smaller units you are supposed to strike a balance between cohesion and coupling. Too much of one could lead to too little of the other. This is what Tom DeMarco wrote in his book Structured Analysis and System Specification:

Cohesion is a measure of the strength of association of the elements inside a module. A highly cohesive module is a collection of statements and data items that should be treated as a whole because they are so closely related. Any attempt to divide them would only result in increased coupling and decreased readability.

Too many people take the idea that a "single responsibility" means "to do one thing, and only one thing" instead of "have a single reason to change", so instead of creating a modular system (containing highly cohesive units) they end up with a totally fragmented system (with all unity destroyed) in which each class has only one method (well, if it has more than one method then it must be doing more than one thing, right?) and each method only has one line of code (well, if it has more than one line of code then it must be doing more than one thing, right?). This, in my humble opinion, is a totally perverse interpretation of SRP and results in ravioli code, a mass of tiny classes which end up by being less readable, less usable, less efficient, less testable and less maintainable. This is like having an ant colony with a huge number of workers where each worker does something different. When you look at this mass of ants, how do you decide who does what? Where do you look to find the source of a bug, or where to make a change?

This ridiculous idea was promoted by Robert C. Martin's article One Thing: Extract till you Drop, which has been totally misinterpreted by legions of gullible programmers.

A prime example of this is a certain open source email library which uses 100 classes, some containing just a single method, as shown in Figure 6:

Figure 6 - Too much separation

This appalling design is made possible by constructing some classes which contain a single method, and having some methods which contain a single line of code. But who in their right minds would create 100 classes just to send an email? WTF!!! Who in their right minds would make this mess even bigger by spreading this collection of micro-classes across a collection of subdirectories? WTF!!! When I see designs like this the following questions come to mind:

In order for a programmer to do his job efficiently he needs to be able to picture the entire structure of the program in his head so that he understands the processing flow, which will then give him an idea where to look in the code for a particular piece of logic. As far as I am concerned the structure shown in Figure 6 is as useless as a disassembled jigsaw puzzle when compared with that shown in Figure 1 above.

In his article Pragmatic OOP the author Ricki Sickenger said the following:

When wanting to keep things simple you should try to let the code flow in a linear fashion. Any related logic code should be kept as close as possible, even if it means using conditional statements. It is a lot easier to read code if most of the important bits are in the same object or file instead of spread out between multiple classes. You should of course use inheritance, but moderately and when necessary. You should also abstract out logic into appropriately named methods to keep a readable level of abstraction. ... You want to maximize readability because that is what matters later on when you are maintaining or debugging. That is the pragmatic approach.

No one is impressed by how OO your code is if it is impossible to debug.

I recently had to add to my ERP application the ability to send SMS messages. As anyone who has done the same will tell you this ability is provided as a service by a third party. You send a web service request to a URL provided by the service provider, and they in turn send an SMS message to the specified mobile device. This is usually a paid-for service, so you have to sign up and obtain an account before you can use it. The service provider I chose allows you to create a test account with an amount of free credits so that you can test it out on up to 10 designated mobile numbers. It has a test page where you can send a test message to one of these numbers, and on the same page it has a button where you can request a sample of the code used to send that message. Code samples are available in a variety of different languages, and the code for PHP showed a single block of 20+ lines of code as follows:

```php
<?php

$curl = curl_init();

curl_setopt_array($curl, array(
    CURLOPT_URL => "https://rest-api.telesign.com/v1/messaging",
    CURLOPT_RETURNTRANSFER => true,
    CURLOPT_ENCODING => "",
    CURLOPT_MAXREDIRS => 10,
    CURLOPT_TIMEOUT => 30,
    CURLOPT_HTTP_VERSION => CURL_HTTP_VERSION_1_1,
    CURLOPT_CUSTOMREQUEST => "POST",
    CURLOPT_POSTFIELDS => "message=Your%20Tracy%20code%20is%20123456&message_type=OTP&phone_number=%2B447775715914",
    CURLOPT_HTTPHEADER => array(
        "authorization: Basic << enter account details here >>",
        "content-type: application/x-www-form-urlencoded"
    ),
));

$response = curl_exec($curl);
$err = curl_error($curl);

curl_close($curl);

if ($err) {
    echo "cURL Error #:" . $err;
} else {
    echo $response;
}
```

I also discovered that instead of writing these 20 lines of code myself I could download a composer package and this would do everything for me, so I decided to give it a try. What could possibly go wrong? Plenty, is the answer.

In the first place the code didn't work. Instead of sending a message it came back with a cURL error because one of the options it set was wrong. I tried to find the code where it set these options, but I could not as it was spread out over multiple classes. I decided to step through the code with my debugger, and this is where I got the shock of my life! I stepped all the way through to the point where the cURL error was being returned, and I still had no clue where the faulty option was being set. At that point I counted the number of classes which had been loaded into my IDE, and was shocked to see that it was over 30. I had also noticed that it had to step through several lines of code in the autoloader so that it could locate, load and instantiate each new class before it could be used. Some of these classes were from vendors other than the SMS provider, and some of these classes provided functionality that I did not need.

I tried the single block of code provided by the service provider, and it worked. I tried the alternative composer package, supposedly written by OO experts using the "proper" techniques (whatever they are), and it failed. I tried to debug and fix this code, but I got so lost in the maze of multiple classes, jumping from one method to another just to execute a single line of code, that I couldn't remember where I had come from nor where I was going. What an unmaintainable pile of pooh!

Being an ardent fan of the KISS and Do The Simplest Thing That Could Possibly Work principles I decided that a small block of code that worked was much better than the over-engineered piece of excrement which did not. Anyone who tells me that "proper" code which uses a large number of small classes is better than a small number of large classes should be prepared for a rude response.

The problem caused by too much separation has been noticed by others. In his article Avoiding Object Oriented Overkill the author Brandon Savage makes the following statement:

The concept that large tasks are broken into smaller, more manageable objects is fundamental. Breaking large tasks into smaller objects works, because it improves maintainability and modularity. It encourages reuse. When done correctly, it reduces code duplication. But once it's learned, do we ever learn when and where to stop abstracting? Too often, I come across code that is so abstracted it's nearly impossible to follow or understand.

The confusion over the idea that "responsibility" should be treated as "reason for change" is discussed in I don't love the single responsibility principle, in which Marco Cecconi says the following:

The purpose of classes is to organize code as to minimize complexity. Therefore, classes should be:

- small enough to lower coupling, but

- large enough to maximize cohesion.

By default, choose to group by functionality.

He also points out that an over-enthusiastic implementation of SRP can result in large numbers of anemic micro-classes that do little and complicate the organisation of the code base.

In his article SRP is a Hoax the author says that the idea of "one reason to change" is a misrepresentation of the original concept of "high cohesion":

My point is that SRP is a wrong idea.

Making classes small and cohesive is a good idea, but making them responsible "for one thing" is a misleading simplification of a "high cohesion" concept. It only turns them into dumb carriers of something else, instead of being encapsulators and decorators of smaller entities, to construct bigger ones.

In our fight for this fake SRP idea we lose a much more important principle, which really is about true object-oriented programming and thinking: encapsulation. It is much less important how many things an object is responsible for than how tightly it protects the entities it encapsulates. A monster object with a hundred methods is much less of a problem than a DTO with five pairs of getters and setters! This is because a DTO spreads the problem all over the code, where we can't even find it, while the monster object is always right in front of us and we can always refactor it into smaller pieces.

Encapsulation comes first, size goes next, if ever.

In his article Thinking in abstractions the author Mashooq Badar wrote the following regarding the impact of too many abstractions (i.e. too many small classes):

If comprehension requires digging from one level of an abstraction to the ones below then the abstraction is actually getting in the way because every jump requires the reader to keep in mind the previous level(s). For example I often see a liberal use of "extract method/class/module", in what may be considered "clean code", to the point that I find myself constantly diving in and out of different levels of abstractions in order to comprehend a simple execution flow. Composed Methods are great but at the same time a liberal use of method/class/module extraction without close attention to pros and cons of each abstraction is not so clever.

The phrase "constantly diving in and out of different levels of abstractions in order to comprehend a simple execution flow" sounds a lot like the Yo-Yo Problem to me.

Uncle Bob also wrote an article called One Thing: Extract till you Drop in which he advocated that you should extract all the different actions out of a function or method until it is physically impossible to extract anything else. In extreme examples this would mean transforming a single function that contains 10 lines of code into 10 functions each containing a single line of code, but to my mind that would be too extreme. Even worse is the idea that each of those functions should be put in its own class. Even worse than that is the idea that each class should be put into a subdirectory named after its calling object. While this may sound good as an academic exercise, is it worthwhile in the real world? I am not the only one who thinks that this idea is excessive, as shown by other people's reactions to his article:

A function by definition, returns 1 result from 1 input. If there's no reuse, there is no "should". Decomposition is for reuse, not just to decompose. Depending on the language/compiler there may be additional decision weights.

What I see from the example is you've gone and polluted your namespace with increasingly complex, longer, more obscure, function name mangling which could have been achieved (quick and readable) with whitespace and comments.

When we write code, we group together elements that are strongly associated (the cohesion/coupling principle). Functions & methods are a way of grouping such elements and providing an image (method name). Saying that a function should do one thing is the same as identifying it as a chunk. However, using the indirection of a function has time and capacity penalties for our limited cognitive process. We can use statement structure and comments to identify chunks and provide images rather than revert to formal functions (and the associated indirection cost). I would envisage that each person's view of the most appropriate level of extraction is different and probably primarily dependent upon programming expertise.

Another view on this topic can be found at Refactoring: Extract a Method, When It's Meaningful.

The idea that "extract till you drop" means splitting a class into a large number of smaller classes shows a total misunderstanding of what Uncle Bob wrote. If you look at his SymbolReplacer class before and after his refactoring you should see that all that has changed is the number of methods within that single class. Nowhere does he suggest that each of those sub-methods should be put in its own class, and nowhere does he suggest that each of those new classes be put into its own directory. This is an example of the pure fabrication anti-pattern as it is an artificial construct which serves no useful purpose. The perpetrators of this stupid idea should hang their heads in shame.

Saying that a class or function should be responsible for only one thing may sound reasonable to the uninitiated, but what is this mystical "one thing"? Once you start this process of separation when do you stop? When do you say "enough is enough"? When do you say "that much and no more"? Unfortunately there are far too many developers out there who cannot recognise the point at which "enough" starts becoming "too much", so they produce abominations such as that shown in Too much separation: Example 1. Putting each line of code into its own method and each method into its own class and each class into its own directory may sound like a good idea in theory, but in practice it is a maintenance nightmare. Following the path through a single block of code containing 100 lines is far easier than jumping through 100 method calls in 100 classes in 100 directories.

It is a question of balance, so you must have the ability to detect when the scales are starting to go in the wrong direction. So how do you detect this cut-off point? I will demonstrate with an example. Suppose I start with a method containing 1,000 lines of code. What do I do first?

Keeping these concerns (GUI, business rules, database) separate is good design.

This means that if I had a method containing 100 lines of code then I would *NOT* split it into smaller units unless I had good reason to. I'm afraid that not being able to count above 10 without having to take my shoes and socks off is *NOT* a good enough reason. As far as I am concerned the principles of encapsulation and cohesion take precedence over every other rule that has been invented. That is why I create a single class for each entity or service, and regardless of how many different steps each class may contain I will not separate out any of those steps into a separate class unless I have a very good reason, such as it needs to be used in other classes.

Some people try to justify this excessive proliferation of classes by inventing a totally artificial rule which says "No method should have more than X lines, and no class should have more than Y methods". What these dunderheads fail to realise is that such a rule completely violates the principle of encapsulation which states that ALL the properties and ALL the methods for an object should be assembled into a SINGLE class. This means that splitting off an object's properties into separate classes, or an object's methods into separate classes, is a clear violation of this fundamental principle. It also has the effect of violating the principle of cohesion by breaking a highly cohesive module which contains a set of closely related functions into a collection of small and less cohesive fragments. This results in increased coupling (by increasing the number of inter-module calls) and decreased readability, and therefore should be avoided. It also violates the notion of information expert which is part of the GRASP principles.

The idea that SRP is based on the ability to count lines of code rather than the separation of multiple responsibilities can only come from people who read a principle then pass on their own description using a different set of words. This mis-translation can then lead to mis-interpretation, as in the following:

Some programmers, after examining nothing more than my abstract table class made the complaint that "it is so huge that is surely must be breaking SRP". This tells me that they do not understand that the main criterion for splitting up large chunks of code is cohesion and not the ability to count. They fail to notice that this class is a component in the Business layer and does not contain any code which quite rightly belongs in either the Presentation layer or the Data Access layer. It is large because every public method is an instance of the Template Method Pattern and thus contains several steps which are spread across a mixture of invariant and variable "hook" methods which cover a myriad of possible scenarios.
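To show why a class built around the Template Method Pattern grows in size without losing cohesion, here is a heavily reduced sketch. It is not the abstract table class described above; the names `AbstractTable`, `preInsert`, `postInsert` and `Customer` are hypothetical, and an array stands in for the Data Access layer.

```php
<?php
// Hypothetical abstract class: the public method fixes the sequence of steps,
// and the hook methods are the only places where a subclass can vary them.
abstract class AbstractTable
{
    /** @var array<string, string> error messages from the last operation */
    public array $errors = [];

    /** @var array<int, array> stand-in for the Data Access layer */
    protected array $store = [];

    // Template method: invariant steps, declared final so subclasses cannot reorder them.
    final public function insertRecord(array $row): array
    {
        $this->errors = [];
        $row = $this->preInsert($row);      // variable step (hook)
        if (count($this->errors) > 0) {
            return $row;                    // invariant step: stop on validation failure
        }
        $this->store[] = $row;              // invariant step: hand the row to storage
        return $this->postInsert($row);     // variable step (hook)
    }

    public function rowCount(): int
    {
        return count($this->store);
    }

    // Default hooks do nothing; a subclass overrides only those it needs.
    protected function preInsert(array $row): array
    {
        return $row;
    }

    protected function postInsert(array $row): array
    {
        return $row;
    }
}

// The subclass holds only what is specific to one table.
class Customer extends AbstractTable
{
    protected function preInsert(array $row): array
    {
        if (empty($row['email'])) {
            $this->errors['email'] = 'Email is required';
        }
        $row['status'] = $row['status'] ?? 'active';   // fill in a default value
        return $row;
    }
}
```

Every additional operation (update, delete, search and so on) adds another template method and another set of hooks to the abstract class, which is why it becomes large even though all of it belongs to the Business layer.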

In my own development framework, the basic structure of which is shown in Figure 1, when I create the Model components I go as far as creating a separate class for each database table which contains all the business rules for that table. Anything less would be not enough, and anything more would be too much. Having a class which is responsible for more than one table would be too much, and having multiple classes sharing the responsibilities for a single table would be too little.

Far too many programmers do not understand what "balanced" means. In this context it means halfway between "not enough" (less than you need) and "too much" (more than you need). If you examine a typical web application you should see code which constructs HTML documents, code which constructs and executes SQL queries, and code which sits between those two and which executes business rules. Putting all of that code into a single module clearly violates SRP as it does not achieve the correct levels of cohesion. A highly cohesive module is a collection of statements and data items that should be treated as a whole because they are so closely related. HTML logic is not related to business logic, so these two should be separated. SQL logic is not related to business logic, so these two should be separated. This is why Uncle Bob wrote:

Keeping these concerns (GUI, business rules, database) separate is good design.

Putting all HTML logic into its own class is therefore a good idea. It starts to become a bad idea when you try to extract the code for each HTML tag and put it into a separate class as this would result in increased coupling and decreased readability.

Similarly taking the multiple business rules which may apply to an entity and putting them into separate classes, or the multiple different SQL queries which may be generated and putting them into separate classes would be a step too far.

In my own development infrastructure, which is shown in Figure 1, each component has a separate and distinct responsibility:

Note that only the Model classes in the Business layer are application-aware. They are stateful entity objects. All the Views, Controllers and DAOs are application-agnostic. They are stateless service objects. This architecture meets the criteria of "reason for change" because of the following:

Each component has a single responsibility which can be readily identified, either as an entity containing data, or a service which can be performed on that data, so when someone tells me that I have not achieved the "correct" separation of responsibilities please excuse me when I say that they are talking bullshit out of the wrong end of their alimentary canals.

The Open-Closed Principle (OCP) states that "software entities should be open for extension, but closed for modification". This is actually confusing as there are two different descriptions - Meyer's Open-Closed Principle and the Polymorphic Open-Closed Principle. The idea is that once completed, the implementation of a class can only be modified to correct errors; new or changed features will require that a different class be created. That class could reuse coding from the original class through inheritance.

While this may sound a "good" thing in principle, in practice it can quickly become a real PITA. My main application has over 450 database tables with a different class for each table. These classes are actually subclasses which are derived from a single abstract table class, and this abstract class is quite large as it contains all the standard code to deal with any operation that can be performed on any database table. This is done by making extensive use of the Template Method Pattern. The subclasses merely contain the specifics for an individual database table by utilising the hook methods. Over the years I have had to modify the abstract table class by changing the code within existing methods or adding new methods. If I followed this principle to the letter I would leave the original abstract class untouched and extend it into another abstract class, but then I would have to go through all my subclass definitions and extend them from the new abstract class so that they had access to the latest changes. I would then end up with a collection of abstract classes which had different behaviours, and each subclass would behave differently depending on which superclass it extended.

It may take time and skill to modify my single abstract table class without causing a problem in any of my 450 subclasses, but it is, in my humble opinion, far better than having to manage a collection of different abstract table classes and then to decide for each subclass from which one of those many alternatives it should inherit. I have never written code to follow this principle simply because I have never seen the benefit in doing so. By not following this principle I do not see any maintenance issues in my code, which means that I do not have any issues to solve by following this principle. On the other hand, if I do start to follow this principle I can foresee the appearance of a whole host of new issues. This principle is not the solution to any particular problem, it is simply the creator of a new set of problems. In my humble opinion this principle is totally worthless as the cost of following it would be greater than the cost of not following it.

According to Craig Larman in his article Protected Variation: The Importance of Being Closed (PDF) the OCP is essentially equivalent to the Protected Variation (PV) pattern: "Identify points of predicted variation and create a stable interface around them". OCP and PV are two expressions of the same principle - protection against change to the existing code and design at variation and evolution points - with minor differences in emphasis. This makes much more sense to me - identify some processing which may change over time and put that processing behind a stable interface, so that when the processing does actually change all you have to do is change the implementation which exists behind the interface and not all those places which call it. This is exactly what I have done with all my SQL generation as I have a separate class for each of the mysql_*, mysqli_*, PostgreSQL, Oracle and SQL Server extensions. I can change one line in my config file which identifies which SQL class to load at runtime, and I can switch between one DBMS and another without having to change a line of code in any of my model classes.
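The idea of putting DBMS-specific processing behind a stable interface can be sketched as below. This is an illustration only and not the code from my framework: the interface `SqlDriver`, the two driver classes, the `dbms` config key and the function names are all hypothetical, and only two differences between the dialects are shown.

```php
<?php
// Hypothetical stable interface around a point of predicted variation (the DBMS).
interface SqlDriver
{
    public function quoteIdentifier(string $name): string;
    public function limitClause(int $rows, int $offset): string;
}

class MySqlDriver implements SqlDriver
{
    public function quoteIdentifier(string $name): string
    {
        return '`' . $name . '`';
    }

    public function limitClause(int $rows, int $offset): string
    {
        return "LIMIT $offset, $rows";
    }
}

class PostgresDriver implements SqlDriver
{
    public function quoteIdentifier(string $name): string
    {
        return '"' . $name . '"';
    }

    public function limitClause(int $rows, int $offset): string
    {
        return "LIMIT $rows OFFSET $offset";
    }
}

// One config value decides which implementation is loaded at runtime.
function loadDriver(array $config): SqlDriver
{
    $map = ['mysql' => MySqlDriver::class, 'pgsql' => PostgresDriver::class];
    $dbms = $config['dbms'] ?? '';
    if (!isset($map[$dbms])) {
        throw new InvalidArgumentException("Unsupported DBMS: $dbms");
    }
    return new $map[$dbms]();
}

// Calling code depends only on the interface, never on a particular driver.
function buildSelect(SqlDriver $driver, string $table, int $rows, int $offset): string
{
    return 'SELECT * FROM ' . $driver->quoteIdentifier($table)
        . ' ' . $driver->limitClause($rows, $offset);
}
```

Changing `['dbms' => 'mysql']` to `['dbms' => 'pgsql']` changes the SQL which is generated without touching `buildSelect()` or anything that calls it.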

In the same article he also makes this interesting observation:

We can prioritize our goals and strategies as follows:

- We wish to save time and money, reduce the introduction of new defects, and reduce the pain and suffering inflicted on overworked developers.

- To achieve this, we design to minimize the impact of change.

- To minimize change impact, we design with the goal of low coupling.

- To design for low coupling, we design for PVs.

Low coupling and PV are just one set of mechanisms to achieve the goals of saving time, money, and so forth. Sometimes, the cost of speculative future proofing to achieve these goals outweighs the cost incurred by a simple, highly coupled "brittle" design that is reworked as necessary in response to true change pressures. That is, the cost of engineering protection at evolution points can be higher than reworking a simple design.

If the need for flexibility and PV is immediately applicable, then applying PV is justified. However, if you're using PV for speculative future proofing or reuse, then deciding which strategy to use is not as clear-cut. Novice developers tend toward brittle designs, and intermediates tend toward overly fancy and flexible generalized ones (in ways that never get used). Experts choose with insight - perhaps choosing a simple and brittle design whose cost of change is balanced against its likelihood. The journey is analogous to the well-known stanza from the Diamond Sutra:

Before practicing Zen, mountains were mountains and rivers were rivers.

While practicing Zen, mountains are no longer mountains and rivers are no longer rivers.

After realization, mountains are mountains and rivers are rivers again.

Even if I do create a core class and modify it directly instead of creating a new subclass, what problem does it cause (apart from offending someone's sensibilities)? If the effort of following this principle has enormous costs but little (or no) payback, then would it really be worth it?

The following comment from "quotemstr" in Why bad scientific code beats code following "best practices" puts it another way:

It's much more expensive to deal with unnecessary abstractions than to add abstractions as necessary.

Like everything else associated with OO, this principle uses definitions which are extremely vague and open to enormous amounts of misinterpretation, as discussed in the following:

I recently came across a pair of articles written by Robert C. Martin (Uncle Bob) which aimed to offer different, and possibly better, interpretations of this principle. In this 2013 article he wrote:

What it means is that you should strive to get your code into a position such that, when behavior changes in expected ways, you don't have to make sweeping changes to all the modules of the system. Ideally, you will be able to add the new behavior by adding new code, and changing little or no old code.

I think that what he is saying here is that if you have developed a proper modular system you should be able to add new functionality by adding a new module and not by modifying an existing module. In this case my implementation of the 3-Tier Architecture achieves this as I can change or add components to the Presentation layer without having to make changes to the Business layer, and I can also change or add components to the Data Access layer without having to make changes to the Business layer. In my implementation of the MVC design pattern I can take the data from a Model and give it to a new View without having to change the Model. I can add new user transactions (tasks) without having to change any existing code. I can add new Model (database table) classes without having to change any existing code.

In this 2014 article he wrote:

You should be able to extend the behavior of a system without having to modify that system.

Think about that very carefully. If the behaviors of all the modules in your system could be extended, without modifying them, then you could add new features to that system without modifying any old code. The features would be added solely by writing new code.

There is a vast plethora of tools that can be easily extended without modifying or redeploying them. We extend them by writing plugins.

While it may be possible to write some software tools which can be extended by the use of plugins, it may not be possible or even practical for other types of software, such as large enterprise applications. It may be possible in some small areas, but not for the entire application. For example, my main enterprise application is an ERP package used by many different customers, and any package developer will know that although the core package will do most of what they want, each customer will want their own customisations. I have managed to deal with these customisations by turning them into plugins. Each customer has his own plugin directory, and each plugin can contain code which is either run instead of or as well as the core code. At runtime the core code looks for the existence of a plugin, and if one is found it is loaded and executed. This means that I can add, change or remove any plugin without touching the core code.
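A minimal sketch of that runtime lookup is shown below. The directory layout (`<pluginRoot>/<customer>/<task>.php`), the `runTask()` function and the convention that a plugin file returns a callable are all assumptions made for this example, and it only covers the case where the plugin runs instead of the core code.

```php
<?php
// Hypothetical layout: <pluginRoot>/<customer>/<task>.php returns a callable.
function runTask(string $pluginRoot, string $customer, string $task, array $data): array
{
    // Core behaviour, used when no plugin exists for this customer.
    $core = function (array $data): array {
        $data['total'] = $data['price'] * $data['qty'];
        return $data;
    };

    $pluginFile = "$pluginRoot/$customer/$task.php";
    if (is_file($pluginFile)) {
        // Plugin found: load it and run it instead of the core code.
        $plugin = require $pluginFile;
        return $plugin($data);
    }
    return $core($data);
}

// Demo setup: create a plugin for one customer in a temporary directory.
$root = sys_get_temp_dir() . '/plugins_' . uniqid();
mkdir("$root/acme", 0777, true);
file_put_contents(
    "$root/acme/price_order.php",
    '<?php return function (array $d): array { $d["total"] = $d["price"] * $d["qty"] * 0.9; return $d; };'
);

$order   = ['price' => 10, 'qty' => 2];
$acme    = runTask($root, 'acme', 'price_order', $order);   // plugin applies a discount
$default = runTask($root, 'other', 'price_order', $order);  // falls back to core code
```

Adding, changing or removing the file in the customer's directory changes the behaviour for that customer only, while `runTask()` itself is never edited.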

These later explanations, which were brought about by the author's realisation that what he had written earlier had been completely misunderstood, are more understandable because they identify a particular set of circumstances where the principle can be applied and can provide genuine benefits. This is much better than trying to apply the principle blindly in all circumstances, especially those circumstances where it would create more problems than it would actually solve.

The Liskov Substitution Principle (LSP) states that "objects in a program should be replaceable with instances of their subtypes without altering the correctness of that program". In geek-speak this is expressed as: "If S is a subtype of T, then objects of type T in a program may be replaced with objects of type S without altering any of the desirable properties of that program". This is supposed to prevent the problem where a subtype overrides methods in the supertype with different signatures, and these new signatures will not work in the supertype.

One problem I see with this principle is that it originated in languages where each class also has a type, and it is possible to inherit from a class of type T and create a subclass of type S. In PHP classes do not have separate types, so this situation cannot exist. In some earlier and less sophisticated languages it is said that subtyping (implementation inheritance) provides polymorphism without code sharing while subclassing (class inheritance) provides code sharing without polymorphism, but this artificial and ridiculous restriction does not exist in PHP, as discussed in Subclasses vs Subtypes. In my framework I have used an abstract table class for several decades to provide both code sharing and polymorphism in my hundreds of concrete table classes.

This may be difficult to comprehend unless you have an example which violates this rule, and such an example can be found in the Circle-ellipse problem. This can be summarised as follows:

One possible solution would be for the subclass to implement the inapplicable method, but to either return a result of FALSE or to throw an exception. Even though this would circumvent the problem in practice, it would still technically be a violation of LSP, so you will always find someone somewhere who will argue against such a practical and pragmatic approach and insist that the software be rewritten so that it conforms to the principle in the "proper" manner.
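The "throw an exception" workaround might look something like the sketch below, which assumes PHP 7.4 or later. The class and method names (`Ellipse`, `Circle`, `stretchX()`) are the ones conventionally used when describing the Circle-ellipse problem and are chosen here purely for illustration.

```php
<?php
class Ellipse
{
    protected float $width;
    protected float $height;

    public function __construct(float $width, float $height)
    {
        $this->width  = $width;
        $this->height = $height;
    }

    // Stretch along the x-axis only; valid for any ellipse.
    public function stretchX(float $factor): void
    {
        $this->width *= $factor;
    }

    public function getWidth(): float  { return $this->width; }
    public function getHeight(): float { return $this->height; }
}

class Circle extends Ellipse
{
    public function __construct(float $diameter)
    {
        parent::__construct($diameter, $diameter);
    }

    // A circle cannot be stretched along one axis and remain a circle,
    // so the inherited method is overridden to refuse the request.
    public function stretchX(float $factor): void
    {
        throw new LogicException('stretchX() is not applicable to a Circle');
    }
}

// Code written against Ellipse works for an Ellipse...
$ellipse = new Ellipse(4.0, 2.0);
$ellipse->stretchX(2.0);

// ...but the same call on the subtype is refused, which is the LSP violation.
$circle = new Circle(3.0);
try {
    $circle->stretchX(2.0);
    $failed = false;
} catch (LogicException $e) {
    $failed = true;
}
```

The circle is never left in an invalid state, yet a caller that expects every `Ellipse` to accept `stretchX()` will still be surprised, which is why purists regard this as a violation.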

Even if your code actually violates this principle, what would be the effect in the real world? If you try to invoke a non-existent method on an object then the program would abort, but as this error would make itself known in the system/QA testing it would be fixed before being released to the outside world. So this type of error would be easily detected and fixed, thus making it a non-problem.

But how does this rule fit in with my application where I have 450 table classes which all inherit from the same abstract class? Should I be expected to substitute the Product subclass with my Customer subclass and still have the application perform as expected?

The Liskov Substitution Principle is also closely related to the concept of Design by Contract (DbC) as it shares the following rules:

Unfortunately not all programming languages have the ability to support DbC, and PHP is not one of them due to the fact it is not statically typed and not compiled. This is discussed in Programming by contracts in PHP. Certain languages, like Eiffel, have direct support for preconditions and postconditions. You can actually declare them, and have the runtime system verify them for you.

There is also one other point to consider - if you only ever inherit from an abstract class then this principle does not apply as you cannot instantiate an abstract class into an object, therefore it is not possible to replace an instance of the superclass with an instance of a subclass. In my framework all my concrete table classes inherit from an abstract table class, so this principle is irrelevant. All I can do is replace an instance of a subclass with an instance of a different subclass. In this way I can replace any instance of a concrete subclass with an instance of any other concrete subclass and the method will be perfectly valid. This is precisely how I can inject any of my concrete table (Model) classes into any of my 45 reusable Controllers, and how I can inject any of my Model classes into my reusable View.
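The substitution of one concrete subclass for another can be sketched as follows, assuming PHP 7.4 or later. The names `TableBase`, `getData()` and `listController()` are hypothetical stand-ins, not the framework's real class or function names.

```php
<?php
// Hypothetical abstract table class: the shared code lives here.
abstract class TableBase
{
    protected string $tableName = '';

    // Inherited unchanged by every concrete table class.
    public function getData(string $where): string
    {
        return "SELECT * FROM {$this->tableName} WHERE $where";
    }
}

class Product extends TableBase
{
    protected string $tableName = 'product';
}

class Customer extends TableBase
{
    protected string $tableName = 'customer';
}

// Hypothetical reusable controller: it calls only methods defined in the
// abstract class, so any concrete subclass can be passed in.
function listController(TableBase $model, string $where): string
{
    return $model->getData($where);
}

$productSql  = listController(new Product(), 'id=1');
$customerSql = listController(new Customer(), 'id=1');
```

The controller works with either subclass, but the results naturally differ, because each subclass describes a different table.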

The Interface Segregation Principle (ISP) states that "no client should be forced to depend on interfaces it does not use". This means that very large interfaces should be split into smaller and more specific ones so that clients will only have to know about the methods that are of interest to them.

PHP4 did not support the keywords "interface" and "implements", and when they were introduced in PHP5 I struggled to see their purpose and their benefit. It was not until I came across Polymorphism and Inheritance are Independent of Each Other that I discovered that they were added to strictly typed languages to enable polymorphism without inheritance. This does not apply to PHP as it is dynamically typed, not strictly typed, and it is possible to provide polymorphism without inheritance *AND* without the use of an interface. How do I know this? Because I had already created a group of classes in my Data Access layer, each handling a different DBMS, and each of these classes supports exactly the same set of method signatures without the use of either of the keywords "extends" or "implements". The use of interfaces in PHP is therefore redundant and a violation of YAGNI.

Furthermore, the use of interfaces is supposed to provide polymorphism, and polymorphism requires the implementation of the same method signatures in multiple classes. Anyone who defines an interface which is only ever used by a single class is therefore adding code to provide a facility which is never used. This is then another violation of YAGNI.

Note that this principle only applies if you use the keywords "interface" and "implements" in your code as in the following example:

    interface iFoobar
    {
        public function method1(...);
        public function method2(...);
        public function method3(...);
        public function method4(...);
    }

    class snafu implements iFoobar
    {
        private $vars = array();

        public function method1(...)
        {
            .....
        }

        public function method2(...)
        {
            .....
        }

        public function method3(...)
        {
            .....
        }

        public function method4(...)
        {
            .....
        }
    }

Here you can see that the interface iFoobar identifies 4 method signatures, and as class snafu implements this interface it must also contain implementations for all 4 of those methods. A problem arises if a class only needs to implement a subset of those methods. For example, if it only needs to implement methods #1, #2 and #3 it still must implement #4 simply because it is defined in the interface.

The solution to this problem is to create smaller interfaces which contain combinations of methods which will always be used together. Using the above example this would mean having one interface containing methods #1, #2 and #3 and another containing method #4.
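Applied to the example above, the split might look like the following sketch. The names `iFoobarCore`, `iFoobarExtra` and `fubar` are invented for illustration, and the parameter lists and method bodies are placeholders because the original elides them.

```php
<?php
// The original four-method interface split into two smaller ones.
interface iFoobarCore
{
    public function method1();
    public function method2();
    public function method3();
}

interface iFoobarExtra
{
    public function method4();
}

// Needs only methods #1 to #3, so it implements only the first interface.
class snafu implements iFoobarCore
{
    public function method1() { return 'one'; }
    public function method2() { return 'two'; }
    public function method3() { return 'three'; }
}

// A class that needs everything implements both interfaces.
class fubar implements iFoobarCore, iFoobarExtra
{
    public function method1() { return 'one'; }
    public function method2() { return 'two'; }
    public function method3() { return 'three'; }
    public function method4() { return 'four'; }
}

$small = new snafu();
$large = new fubar();
```

Class `snafu` is no longer forced to carry a dummy implementation of method #4.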

In the PHP language the use of the keywords "interface" and "implements" is entirely optional and unnecessary, and as I do not use them in my framework this principle is irrelevant. I prefer to use a single abstract table class which is inherited by every concrete table class as this provides more benefits and fewer problems, as discussed in What is better - an interface or an abstract class?

The Dependency Inversion Principle (DIP) states that "the programmer should depend upon abstractions and not depend upon concretions". This is pure gobbledygook to me! Another way of saying this is:

This to me is meaningless garbage and is totally confusing as it uses the terms "abstract" and "concrete" in ways which contradict how OO languages are supposed to be used. According to Designing Reusable Classes, which was published by Ralph E. Johnson & Brian Foote in 1988, the term "abstraction" should equate to the creation of an abstract class which is then inherited by any number of concrete classes, but this principle makes no mention of inheritance. The above article also talks about layers such as a Policy layer, a Mechanism layer and a Utility layer which are meaningless to me as I only deal with enterprise applications which consist of a Presentation layer, a Business layer and a Data Access layer. The usage of the word "interface" also tells me that this principle is useless to me as I don't use interfaces, as explained in the Interface Segregation Principle.

My understanding of OOP is as follows:

The DIP is actually concerned with a specific form of de-coupling of software modules. According to the definition of coupling the aim is to reduce coupling from high or tight to low or loose and not to eliminate it altogether as an application whose modules are completely de-coupled simply will not work. Coupling is also associated with Dependency. The modules in my application are as loosely coupled as it is possible to be, and any further reduction would make the code more complex, less readable and therefore less maintainable.

A more accurate description would be as follows:

Where an object C calls a method in object D then C is dependent on D as it cannot perform its function without consuming a service from object D.

Without Dependency Inversion then object C will identify and instantiate object D within itself.

With Dependency Inversion object D will be instantiated outside of object C and "injected" into it.

Note that in my own implementation I have places where object D is NOT instantiated outside of object C. Instead I inject the class name for D into object C and then instantiate it within object C. I can do this because I never have to alter the configuration of object D.
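The three arrangements described above can be compared side by side in a short sketch, assuming PHP 7.4 or later. All class names here (`D`, `AlternativeD`, `CHardWired`, `CInjected`, `CNamed`) are hypothetical and exist only to label the variants.

```php
<?php
// Hypothetical service class playing the role of "object D".
class D
{
    public function serve(): string
    {
        return 'service from ' . static::class;
    }
}

class AlternativeD extends D {}

// 1. No inversion: C identifies and instantiates D itself.
class CHardWired
{
    public function run(): string
    {
        $d = new D();                   // dependency fixed inside C
        return $d->serve();
    }
}

// 2. Dependency injection: D is instantiated outside C and passed in.
class CInjected
{
    private $d;

    public function __construct($d)
    {
        $this->d = $d;
    }

    public function run(): string
    {
        return $this->d->serve();
    }
}

// 3. Class-name injection: C receives only the name and instantiates it.
class CNamed
{
    private string $className;

    public function __construct(string $className)
    {
        $this->className = $className;
    }

    public function run(): string
    {
        $class = $this->className;
        $d = new $class();              // instantiated inside C, chosen outside
        return $d->serve();
    }
}

$r1 = (new CHardWired())->run();
$r2 = (new CInjected(new AlternativeD()))->run();
$r3 = (new CNamed('AlternativeD'))->run();
```

Variants 2 and 3 both let the caller decide which class supplies the service; variant 3 is only practical when the dependent object needs no configuration before use.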

The only workable example of this principle which makes any sense to me is the "Copy" program which can be found in the following articles written by Robert C. Martin:

The proposed solution is to make the high level Copy module independent of its two low level reader and writer modules. This is done using Dependency Injection (DI) where the reader and writer modules are instantiated outside the Copy module and then injected into it just before it is told to perform the copy operation. In this way the Copy module does not know which devices it is dealing with, nor does it care. Provided that they each have the relevant read and write methods then any device object will work. This means that new devices can be created and used with the Copy module without requiring any code changes or even any recompilations of that module.
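A PHP rendering of that idea might look like the sketch below, assuming PHP 7.4 or later. It is not Robert C. Martin's code: the device classes `StringReader` and `BufferWriter` are hypothetical stand-ins for real devices, chosen so that the example is self-contained.

```php
<?php
// Hypothetical device classes: any object with read() can act as a reader,
// and any object with write() can act as a writer.
class StringReader
{
    private array $chars;

    public function __construct(string $text)
    {
        $this->chars = str_split($text);
    }

    // Returns the next character, or null at end of input.
    public function read(): ?string
    {
        return array_shift($this->chars);
    }
}

class BufferWriter
{
    public string $buffer = '';

    public function write(string $char): void
    {
        $this->buffer .= $char;
    }
}

// The high-level module: it knows nothing about the devices it is given.
class Copy
{
    private $reader;
    private $writer;

    public function __construct($reader, $writer)
    {
        $this->reader = $reader;
        $this->writer = $writer;
    }

    public function run(): void
    {
        while (($char = $this->reader->read()) !== null) {
            $this->writer->write($char);
        }
    }
}

$writer = new BufferWriter();
$copy   = new Copy(new StringReader('hello'), $writer);
$copy->run();
```

A new device only has to supply a `read()` or `write()` method with the same signature; the `Copy` class itself is not modified.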