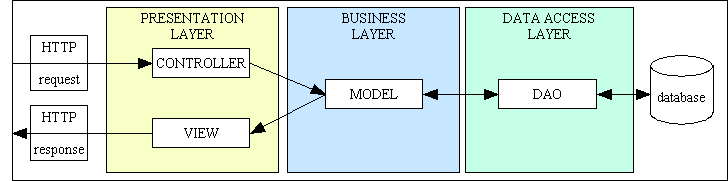

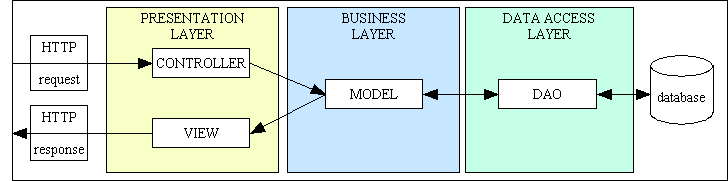

Figure 1 - MVC plus 3 Tier Architecture

I recently came across an article with the title Why PHP is not suitable for enterprise grade web applications, and as a developer who has spent the last 40 years writing such applications, with the last 20 years using PHP, I cannot agree with the author's opinion that PHP is not a suitable language for this task. But what exactly is an enterprise application? A good place to start is this description from Martin Fowler. An enterprise application can be characterised as follows - it has thousands of tasks (user transactions or use cases) and hundreds of database tables where each task performs one or more operations on one or more tables. It is a Transaction Processing System (TPS) rather than an Online Analytical Processing (OLAP) system.

In the first 20 years of my career, while working for various software houses, I wrote small bespoke applications for various organisations to deal with such things as:

All these follow a similar pattern in that they involve electronic forms at the front end, a database at the back end, and software in the middle to handle the transfer of data and the processing of business rules. Note that these were used by members of staff to administer the business on a daily basis. There were no customer-facing websites as the internet had yet to be invented, so Order Entry was a task carried out by members of staff using paper-based orders supplied by each customer. I used two compiled languages, starting with COBOL in the late 1970s then moving to UNIFACE in the early 1990s, and a mixture of hierarchical, network and relational databases. These were all desktop applications running on monochrome monitors which were connected to an in-house mini-computer, either a Data General Eclipse or a Hewlett Packard 3000 or 9000. It was not until the mid 1990s that we switched to Windows PCs, but these were still connected to in-house servers as the internet was still in its infancy.

As my experience grew I found that I had a knack for spotting repeating blocks of code which I could put into reusable libraries and thus increase my level of productivity. For one particular project in the mid-1980s the client asked for what turned out to be a Role Based Access Control (RBAC) system, so on the following weekend I designed a database to deal with all his requirements, and on the Monday I started writing the code to maintain the contents of that database. By the Friday of that week what turned out to be my first framework was up and running. The database had a USER table which identified those people who could access the system, a TRAN (short for TRANSACTION) table which identified the tasks (user transactions) that could be performed, a MENU table which provided pages of options which the user could view in order to find a particular transaction they wanted to run, and a TRAN-USER table which identified which Transactions were accessible by which User. This satisfied that particular client, and my solution was so successful that the framework and my library of reusable software became the company standard for all future projects. When the company started to use UNIFACE instead of COBOL I used the same database design to build a new version of the framework in that language.

For my last UNIFACE project in 1999 I was poached by a different software house as they didn't have enough developers with UNIFACE skills. Compuware had added some modules to the software to convert a compiled page into an HTML document so that it could function as a web application, but as I began learning about HTML on my home PC I realised that their approach was far from satisfactory, so I left them and looked for a more suitable language. I chose PHP as it was built specifically to link HTML pages to a relational database. I decided to teach myself this language in my own time and on my own PC before I looked for employment, and after building a small Sample Application as Proof of Concept I rebuilt my entire framework in PHP and MySQL. At the request of another software house I released this as open source, under the name RADICORE, in 2006. After being asked to build a small bespoke application by that software house I was later asked if I could design and build a reusable package that could be used by multiple customers instead of writing a separate bespoke version for each one. This I started in 2007 with the first prototype being available in 6 man-months, and it went live 6 months later after I had amended all the functions in their B2C website to access the new properly normalised database instead of the old one.

Because of my previous experience I knew that enterprise applications involved the movement of data into and out of a database, with lots of different pieces of information scattered across lots of tables. I knew that each user transaction performed one or more operations on one or more database tables, and I knew that each table, regardless of the data which it contained, was subject to exactly the same four basic operations - Create, Read, Update and Delete (CRUD). All the sample PHP code which I had seen during my learning phase spat out fragments of HTML code during the execution of each script, but I did not like that idea. I decided to delay the construction of any HTML output until after the business/domain objects had finished their processing at which point I could extract all the application data, copy it to an XML document and transform it into an HTML document using an XSL stylesheet. In my first iteration I used a bespoke XSL stylesheet for each web page, but as I had already noticed repeating patterns in the layout of the screens, and repeating patterns of behaviour in the user transactions, I went through several iterations of refactoring until I created a library of just 12 reusable XSL stylesheets which can be used for any web page in the application. I also used the patterns of behaviour to create a library of reusable page controllers which perform the same set of operations in the same sequence but on a domain object whose identity is not known until runtime using Dependency Injection. This enabled me to produce a library of reusable Transaction Patterns which I use to generate the initial copies of each application component. I had already learned that the heart of a database application is the database itself, so I always start with a solid database design, then build sets of user transactions which are appropriate for each table using this library of Transaction Patterns.
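The idea of holding back all HTML until the domain objects have finished their processing can be sketched roughly as follows. This is only an illustration and not the RADICORE code: the function names `dataToXml()` and `renderHtml()`, the element names and the tiny stylesheet are all invented for the example, and it assumes that PHP's DOM and XSL extensions are installed.

```php
<?php
// Hypothetical helper: copy the rows produced by a domain object into an XML document.
function dataToXml(string $tableName, array $rows): DOMDocument
{
    $doc  = new DOMDocument('1.0', 'UTF-8');
    $root = $doc->appendChild($doc->createElement('root'));
    foreach ($rows as $row) {
        $rowNode = $root->appendChild($doc->createElement($tableName));
        foreach ($row as $field => $value) {
            $fieldNode = $rowNode->appendChild($doc->createElement($field));
            // createTextNode() leaves the escaping of special characters to the serialiser
            $fieldNode->appendChild($doc->createTextNode((string) $value));
        }
    }
    return $doc;
}

// Hypothetical helper: transform the XML document into HTML using an XSL stylesheet.
function renderHtml(DOMDocument $xml, string $xslSource): string
{
    $xsl = new DOMDocument();
    $xsl->loadXML($xslSource);
    $processor = new XSLTProcessor();      // requires the XSL extension
    $processor->importStylesheet($xsl);
    $html = $processor->transformToXml($xml);
    if (!is_string($html)) {
        throw new RuntimeException('XSL transformation failed');
    }
    return $html;
}

// An invented stylesheet which knows nothing about the table or its columns.
$stylesheet = <<<'XSL'
<xsl:stylesheet version="1.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:output method="html" indent="no"/>
  <xsl:template match="/root">
    <table>
      <xsl:for-each select="*">
        <tr>
          <xsl:for-each select="*">
            <td><xsl:value-of select="."/></td>
          </xsl:for-each>
        </tr>
      </xsl:for-each>
    </table>
  </xsl:template>
</xsl:stylesheet>
XSL;

// The rows would normally come from a domain object; here they are hard-coded.
$rows = [['person_id' => 1, 'name' => 'Smith & Sons']];
$html = renderHtml(dataToXml('person', $rows), $stylesheet);
echo $html;
```

Because the stylesheet in this sketch iterates over whatever elements it finds, the same stylesheet could be applied to the data from a different table without any change, which is the property that makes a small library of stylesheets reusable.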

The RADICORE framework is actually a semi-complete application which allows you to create a complete system of integrated subsystems. Each subsystem has its own database, its own subdirectory for its files, and its own set of tasks on the MENU database. The framework itself consists of the following four subsystems:

After creating the database for a new subsystem the Data Dictionary is used to generate the components for that subsystem, and the RBAC system is used to run those components. There is no limit to the number of subsystems which can be added.

As explained in the previous section I spent 20 years writing numerous bespoke enterprise applications in both COBOL and UNIFACE, using frameworks which I designed and built myself, before I switched to using PHP in 2002. I then rebuilt a new version of those frameworks in PHP, which I called RADICORE, so that it could help me to build application components as well as run them. My original TRANSIX application, which was built using this framework, consisted of the following subsystems:

Each of these subsystems has its own directory structure for all its scripts, its own application database, and its own task entries in the framework's MENU database. My starting point for each of them was the database design in Len Silverston's Data Model Resource Book. After building each database and importing it into my Data Dictionary I could generate the class files for each database table followed by the various scripts for the tasks required to view and maintain the contents of those tables. Note that it took an average of one man-month to create a working prototype for each of those subsystems. Each table class file inherits all its standard code from an abstract table class, so all the developer has to do is insert custom processing into the relevant "hook" methods.
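The relationship between an abstract table class and its "hook" methods might look something like the following sketch. The class names, method names and the in-memory array standing in for the database are all assumptions made for this illustration; they are not the names used in RADICORE.

```php
<?php
// Hypothetical abstract class holding the standard code shared by every table class.
abstract class AbstractTable
{
    protected array $errors = [];
    protected array $stored = [];   // stand-in for a real database table

    // Standard processing: the sequence of steps never changes.
    public function insertRecord(array $row): array
    {
        $this->errors = [];
        $row = $this->preInsert($row);        // hook: custom validation or defaults
        if ($this->errors === []) {
            $this->stored[] = $row;           // a real version would issue an SQL INSERT here
            $row = $this->postInsert($row);   // hook: custom follow-up processing
        }
        return $row;
    }

    public function getErrors(): array
    {
        return $this->errors;
    }

    public function countStored(): int
    {
        return count($this->stored);
    }

    // Empty hooks: a subclass overrides only the ones it needs.
    protected function preInsert(array $row): array
    {
        return $row;
    }

    protected function postInsert(array $row): array
    {
        return $row;
    }
}

// A concrete table class contains nothing but its own business rules.
class CustomerTable extends AbstractTable
{
    protected function preInsert(array $row): array
    {
        if (trim($row['name'] ?? '') === '') {
            $this->errors['name'] = 'Name is required';
        }
        $row['status'] = $row['status'] ?? 'ACTIVE';   // business rule: default value
        return $row;
    }
}

$customer = new CustomerTable();
$saved    = $customer->insertRecord(['name' => 'ACME Ltd']);   // accepted and stored
$rejected = $customer->insertRecord(['name' => '  ']);         // rejected by the hook
```

In this arrangement the second call stores nothing, and `getErrors()` reports the reason, without the subclass having to know anything about how the insert itself is performed.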

In 2014 I formed a partnership with Geoprise Technologies in order to market my ERP application to a wider audience under the name GM-X, and within the first year it had been sold to an aerospace company in Asia. The following subsystems were added over a period of time to increase the options available to future customers:

Note that this type of application is used only by members of staff to support the running of the organisation's business activities. It does not include such things as an e-commerce website used by members of the public as that is a totally different entity even though it may have shared access to some of the databases. An e-commerce website is usually limited to just 2 activities - show products and accept orders - whereas the back-office enterprise application used by members of staff does much, much more. For example GM-X currently has over 4,000 tasks covering many aspects of the business where user access is limited by the framework's RBAC system.

Below are my responses to the criticisms of PHP in that article.

If you have performance or scalability issues with your application then you should not start by instantly blaming the language. You first have to identify the bottleneck before you can look for possible solutions. If your application is too slow perhaps it could be a hardware issue. If you are running on an out-dated server with small amounts of memory yet the workload has increased then blaming the software would be plain wrong. It would be like blaming your 2-ton pickup truck because it cannot deal with 10-ton loads. In recent years more and more businesses have migrated to cloud servers instead of in-house servers as it then becomes much easier to spread the load across multiple servers should the load become too much for a single server. It may also be beneficial to host the application and the database on separate servers. The cost of upgrading the hardware is more often than not much more cost-effective than rewriting the software. If you have a large application written in PHP which you think would run faster if rewritten in language X you should start by asking the following questions:

A common situation I have encountered with software which performs badly is that it is often not the language in which it was written which causes the problem but the way in which it was written. Executing thousands of instructions where only hundreds are necessary will always be a waste of processor cycles. A badly structured program will always perform worse than a well-structured program, and that will always be the fault of the programmer and not the language. In my early days I overheard a programmer complain that it was impossible to write structured code in COBOL, but what he failed to realise was that while the language made no attempt to enforce a particular structure it did provide the tools to allow you to implement a structure of your choosing. The failure was his inability to design a suitable structure using the features that the language provided. The first piece of code I saw was badly structured and difficult to follow, but the second was written by someone with more ability and was a real eye-opener. I used his ideas as the basis for all my future programs and, following a course in Jackson Structured Programming, I enhanced these ideas until they culminated in my COBOL Programming Standards which I wrote while being a team leader in a software house.

I have encountered quite a few examples of code written by others which follows so-called "best practices", popular principles and oodles of design patterns, and quite frankly I am shocked at the amount of code which is used to complete relatively simple tasks. While each of these principles was probably written with good intentions in mind the biggest problem is that each should only be implemented in the appropriate circumstances, but the vast majority of programmers do not seem to have the mental capacity to evaluate what "when appropriate" actually means. They also seem to think that there is a single set of best practices which all programmers should be obliged to follow, but this is absolute nonsense. Each team should be free to create and follow whatever practices they deem best for the type of application which they write as well as the programming language which they use. Standards devised for a compiled and strictly-typed language are not appropriate for an interpreted and dynamically-typed language. Standards devised for programs which are not primarily concerned with using HTML forms to move data in and out of a relational database are not appropriate for enterprise applications.

My critics, of whom there are many, are very fond of telling me at every possible opportunity that my code must be bad simply because it doesn't follow the Single Responsibility Principle (SRP). My argument is that my code does follow SRP, just not their interpretation. When the principle was first published its criterion was stated as "reason for change", but as this was so vague and non-specific I didn't know how to go about implementing it, so I decided to ignore it. The principle's author himself had to write some follow-up articles to describe what he really meant using terminology that wasn't ambiguous, and it turned out that what he was describing was already known as the 3-Tier Architecture which I had already implemented after discovering it during my work with UNIFACE. SRP, which is exactly the same as Separation of Concerns (SoC), was supposed to be based on the idea of cohesion which was first described by Tom DeMarco in 1979 and Meilir Page-Jones in 1988. Cohesion is based on functionality where all those functions which are closely related should be grouped together within the same module. Presentation logic is different from Business logic, which in turn is different from Database logic, which is why they should be split into three separate modules. In my own implementation I split the presentation layer into two by putting the generation of all HTML forms into a separate component thus producing a version of the Model-View-Controller (MVC) design pattern, as shown in the following diagram:

Figure 1 - MVC plus 3 Tier Architecture

However, Uncle Bob threw a spanner in the works when he published One Thing: Extract till you Drop in which he advocated that you should extract all the different actions out of a function or method until it is physically impossible to extract anything else. This can be taken to ridiculous lengths by splitting code so much that you end up with methods that contain a single line of code. Even worse is when you put each of those tiny methods into a separate class. This is contrary to the basic idea of object-oriented design in which domain models are supposed to combine both behaviour and state. If you have a class with a single method and no state then it is no different from a procedural function. Every line of code which is part of the same responsibility should exist in the same object. If you take a single cohesive component and break it down into lots of tiny fragments you are actually making the code less readable and more difficult to maintain. If I have a method which contains 50 lines of code (LoC) which are executed sequentially then I find it easier to read those 50 lines in a single place than have them scattered across 50 separate classes. The idea that a method containing 50 LoC is more difficult to read is complete and utter bunkum. Have you ever tried to assemble in your mind a sequence of 50 instructions where each of those instructions is in a separate place? Not only would this be more difficult to read, it would also be slower to run. It would also be more difficult to write as you then have to invent 50 different method names. If you have 50 lines of code spread across 50 classes, and each class is in a separate file, then that would be 50 files which have to be located and loaded each time. If each of these classes then implements a different interface which in turn is defined in a separate file then that would double the number of files. 
Searching for and then loading 100 files just to execute 50 lines of code does not sound very efficient to me, so excuse me if I prefer to do it the old fashioned way.

This proliferation of bad code is exacerbated by the inappropriate implementation of other design patterns, such as:

Design patterns are supposed to represent solutions to common problems, but implementing a solution when you don't have that problem can never be a good idea. This is a quote from Erich Gamma, one of the authors of the GoF book, in the article How to use Design Patterns:

Do not start immediately throwing patterns into a design, but use them as you go and understand more of the problem. Because of this I really like to use patterns after the fact, refactoring to patterns.

One comment I saw in a news group just after patterns started to become more popular was someone claiming that in a particular program they tried to use all 23 GoF patterns. They said they had failed, because they were only able to use 20. They hoped the client would call them again to come back again so maybe they could squeeze in the other 3.

Trying to use all the patterns is a bad thing, because you will end up with synthetic designs - speculative designs that have flexibility that no one needs. These days software is too complex. We can't afford to speculate what else it should do. We need to really focus on what it needs. That's why I like refactoring to patterns. People should learn that when they have a particular kind of problem or code smell, as people call it these days, they can go to their patterns toolbox to find a solution.

In his article the author states the following:

PHP is more suitable and shines where comparatively smaller web sites and applications where number of parallel requests are low, and definitely not for comparatively larger applications. When it comes to applications it requires faster execution and more enterprise features like queue based, asynchronous, multi-threaded processing I would suggest PHP is not suitable for it. The limitations of line by line execution becomes a problem when such features are in need for scalability and performance reasons.

In the 40 years I have spent writing administrative applications for enterprises, which excludes customer-facing websites, I have never encountered any requirement which could only be satisfied by queue based, asynchronous, or multi-threaded processing. Writing software which has electronic forms at the front end, which nowadays is predominantly HTML, a relational database at the back end, and program code in the middle to handle the data transfer and the processing of business rules, is so commonplace that it is practically boring. There is no need for cutting-edge, bleeding-edge technological advances when what the customers want is more of the same but cheaper, faster and bigger. Faster and bigger can be provided by the latest hardware with faster processors and bigger storage such as Solid State Drives (SSD). When it comes to the performance of the software it would be extremely short-sighted to say that it depends entirely on the choice of programming language. Well-written code will always perform better and be more maintainable than badly-written code. Well-written code can be maintained, enhanced and expanded more easily than badly-written code. It is therefore the choice of programmer and not the programming language which is more important.

Definitely who needs such features? There are handy for architects to solve performance issues and scalability concerns. Activator patterns where queues are heavily used for order processing applications for eg. where every single second a whole lot of perhaps millions worth transactions are processed.

Organisations who are looking for a new enterprise application come in all shapes and sizes - small, medium, large and extra large. Some are looking to replace their old systems which can no longer cope with their latest business requirements and increased workloads while others have only been trading for a short while and wish to implement an integrated application rather than pass around spreadsheets from one person to another. The percentage of organisations which need to process a million transactions per second will be extremely small, so they will obviously have a big budget that can support the latest and most expensive hardware. Note that you don't have to change the software to switch to a faster processor or a bigger storage system. If your application has more requests than can be handled by a single server then you buy multiple servers with a load balancer at the front, and it will be the load balancer which will be responsible for balancing the load.

Node and Javascript stack I would say is not really comparable with PHP as they are for different purpose.

The author has actually said something with which I agree. A modern enterprise application has to employ a different set of domain-specific languages to deal with different aspects of the application. In my own application I use the following:

I have never encountered a requirement that would benefit from a different technology such as a NoSQL database, queue processing or asynchronous processing, but if I did I would satisfy that particular requirement with the most relevant technology and leave the rest of the application as standard.

When it comes to using Node.js and javascript code I have rarely found a use for these in the back office enterprise application which is used only by members of staff. If there is a front office e-commerce website which is open to the public then it is a separate application. It will have a different URL which probably means that it runs on a different server. This also allows it to be written in a different language, which is what I have encountered in the past.

In his article the author states the following:

When you include a file in PHP, it is just a direct file load and it does not look other file already loaded if you have not used require_once kind, but that too is restricted in terms of global inclusion.

This is such bad English that I have great difficulty in understanding what he means. I know the difference between require() and require_once() as I have used them both many times in the past 20 years.

I also know that PHP, because it runs on the server and can receive requests from multiple clients, uses a shared-nothing architecture which means that each request starts off with absolutely no memory of any previous request from the same client, so it is like a blank page. It may need to service hundreds of requests from hundreds of different clients before it receives a follow-up request from a previous client, but it simply does not have the mechanism to link a request from a client device with any previous request from the same client device. This is not a limitation of the PHP language, it is a limitation of the web server under which PHP is being run. When each request is received it will be treated as a brand new request. It will need to reload all the files it needs one by one, but it can use the unique session_id provided by that client to reload any data that was saved by the previous request that was processed for that client.

Code running on the client device does not start with a blank page as it is running under a web browser, not a web server. Each web page on that browser is capable of storing in the browser's cache any files which need to be downloaded from the server, and these cached files are made available to any other web pages. The idea that all the files on the server can be uploaded to the client device is completely preposterous. A large enterprise application can take up many gigabytes of files on the server, so uploading copies of all those files onto every client device would be a monumental task. How would the client recognise that a file on the server had been changed and needed to be reloaded? What about that code which can only be processed on the server, such as accessing the database?

The idea that page loading with PHP is slow is nothing to do with the language but how many files are required to render each page, with fewer being better, and that is the responsibility of the programmer. I was once asked to debug what should have been a small problem with somebody else's code which meant installing it on my PC and running it with the debugger in my IDE turned on. Each time it required a new file it loaded that file into my IDE's window, then stepped forward for a few lines before it found it necessary to load another file. After about 30 minutes the script terminated, and I counted over 100 different files loaded into my IDE. It was showing the error, but I hadn't the faintest idea where that error was generated, nor did I know which of those 100 scripts was the likely culprit. I gave up in disgust and immediately purged all that useless code from my system. Any programmer who thinks it's a good idea to use 100 scripts just to load a web page has a lot to learn.

I remember the struggle I had to optimise a PHP application to have a binary cash enabled to avoid repeated interpretation.

Welcome to the world of interpreted rather than compiled languages. You pays your money and you takes your choice. The benefit of a language such as PHP is speed of development, therefore lower costs of development, not speed of execution, and that is much more important to most customers. It would take longer and cost more to write it in a compiled language, so many customers would prefer to have their application developed sooner and spend any left-over money on faster hardware. There is also the option to employ a third-party PHP accelerator which will compile each script into bytecode so that it can reuse the same bytecode without compiling the same script again and again.
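One such bytecode cache is OPcache, which has been bundled with PHP since version 5.5. The following sketch reports whether it is active for the current process. The function name `describeOpcache()` and its messages are invented for this example; only `opcache_get_status()` is part of PHP itself.

```php
<?php
// Hypothetical helper: report whether compiled bytecode is being cached.
function describeOpcache(): string
{
    if (!function_exists('opcache_get_status')) {
        return 'OPcache extension is not loaded';
    }
    // false = do not list every cached script; @ hides the warning raised
    // when access to the OPcache API has been restricted by configuration.
    $status = @opcache_get_status(false);
    if (!is_array($status) || empty($status['opcache_enabled'])) {
        return 'OPcache is loaded but not enabled for this process';
    }
    return 'OPcache is enabled';
}

echo describeOpcache(), PHP_EOL;
```

Note that when this is run from the command line the cache is normally reported as not enabled unless the `opcache.enable_cli` setting has been switched on, so the check is more meaningful when run under the web server.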

NodeJS and Javascript helps to manage code re-usable better and thus easier to maintain as well. PHP uses more server side include than delegation, which I would consider as a defective and vulnerable approach when high security concerns like script injection.

This statement is misleading. No programming language produces delegation "out of the box". That is a design pattern which requires the programmer to write the relevant code. If an application does not have any instances of this pattern then how can that be the fault of the language? If a programmer writes code which makes it vulnerable to script injection then how can that be the fault of the language?
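As an illustration that protection against script injection depends on how the code is written, here is a minimal sketch which escapes user-supplied data before it is included in an HTML page. The wrapper name `escapeForHtml()` is an assumption for this example; `htmlspecialchars()` is the standard PHP function which does the work.

```php
<?php
// Hypothetical wrapper: make user-supplied text safe to embed in HTML.
function escapeForHtml(string $value): string
{
    // ENT_QUOTES escapes both single and double quotes,
    // ENT_SUBSTITUTE replaces invalid byte sequences instead of returning an empty string.
    return htmlspecialchars($value, ENT_QUOTES | ENT_SUBSTITUTE, 'UTF-8');
}

$userInput = '<script>alert("x")</script>';
$safe      = escapeForHtml($userInput);

echo '<p>' . $safe . '</p>', PHP_EOL;
// prints <p>&lt;script&gt;alert(&quot;x&quot;)&lt;/script&gt;</p>
```

The browser then displays the text of the script instead of executing it. A programmer who omits this step has created the vulnerability, whichever language is being used.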

Whether code is maintainable or not is not determined by the programming language, it is determined by the skill of the programmer. There is no language in the world which can prevent a bad programmer from writing bad code.

I am still maintaining the code base which I first wrote in 2002, and it has grown from a framework for writing enterprise applications into an ERP application in its own right. This application contains over 20 subsystems, 400 database tables and 4,000 tasks. In the past couple of years I have upgraded all the HTML forms to be responsive, which took one man-month. I have also added customisable blockchain support to enable collaborating partners to exchange data in a very secure manner, which again took one man-month. I could not have done that unless the code was built to high standards.

Data Streaming can be defined as follows:

Also known as stream processing or event streaming, data streaming is the continuous flow of data as it is generated, enabling real-time processing and analysis for immediate insights.

Data Streaming is not a function of the language, it is a software service that needs to be written by a programmer. You can either write the code yourself, or use something already developed from a third-party provider.

As you can see, data streaming is used purely for analytical purposes, for analysing streams of data, whereas enterprise applications are primarily developed as Transaction Processing Systems (TPS). This type of system produces data which can be fed into an Online Analytical Processing (OLAP) system which then analyses that data looking for patterns and statistics. The two systems are entirely separate and need not be run on the same hardware or even built with the same language.

TPS systems usually have a Relational Database at the back end while OLAP systems often use a Functional Database.

This argument is irrelevant. Enterprise applications are comprised of large numbers of transactions whose sole purpose is to use the standard CRUD operations on large numbers of different database tables. If you read Functional Programming VS Object Oriented Programming (OOP) Which is better...? you should see this comparison:

Object-oriented languages are good when you have a fixed set of operations on things, and as your code evolves, you primarily add new things. This can be accomplished by adding new classes which implement existing methods, and the existing classes are left alone.

Functional languages are good when you have a fixed set of things, and as your code evolves, you primarily add new operations on existing things. This can be accomplished by adding new functions which compute with existing data types, and the existing functions are left alone.

An enterprise application is comprised of thousands of tasks each of which performs one or more operations on one or more database tables, and while the number of database tables may grow, and the number of tasks may grow, the number of operations will remain fixed at Create, Read, Update and Delete. These characteristics therefore make it a perfect match for the Object Oriented paradigm.

So what? I can't see why this is a problem for enterprise applications as their primary purpose is to ensure the integrity of the data. This means that each I/O operation must be completed before the next one is allowed to start. This is normally achieved by using an ACID-compliant database. However, within web pages which are run in the client's browser and which are comprised of HTML and optional JavaScript it is quite possible to speed up processing in the web page by using whatever non-blocking I/O techniques are available. Whatever JavaScript code is run on the client device is totally separate from any PHP code which is run on the server.
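The reliance on an ACID-compliant database can be illustrated with a small sketch in which each statement completes before the next one starts, and a failure part way through undoes everything. The table names, the `saveOrder()` function and the use of an in-memory SQLite database through PDO are all assumptions chosen to keep the example self-contained; it requires the pdo_sqlite extension.

```php
<?php
$db = new PDO('sqlite::memory:');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$db->exec('CREATE TABLE order_header (order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL)');
$db->exec('CREATE TABLE order_line (order_id INTEGER NOT NULL, product TEXT NOT NULL,
                                    qty INTEGER NOT NULL CHECK (qty > 0))');

// Hypothetical task: either the header and all its lines are saved, or nothing is.
function saveOrder(PDO $db, string $customer, array $lines): bool
{
    $db->beginTransaction();
    try {
        $header = $db->prepare('INSERT INTO order_header (customer) VALUES (?)');
        $header->execute([$customer]);             // completes before the next statement starts
        $orderId = (int) $db->lastInsertId();

        $line = $db->prepare('INSERT INTO order_line (order_id, product, qty) VALUES (?, ?, ?)');
        foreach ($lines as [$product, $qty]) {
            $line->execute([$orderId, $product, $qty]);
        }
        $db->commit();                             // make all the changes permanent together
        return true;
    } catch (PDOException $e) {
        $db->rollBack();                           // undo the header as well as any lines
        return false;
    }
}

$ok     = saveOrder($db, 'ACME Ltd', [['widget', 3]]);
$failed = saveOrder($db, 'Bad Order Ltd', [['widget', 2], ['gadget', 0]]);   // qty 0 breaks the CHECK

$headerCount = (int) $db->query('SELECT COUNT(*) FROM order_header')->fetchColumn();
$lineCount   = (int) $db->query('SELECT COUNT(*) FROM order_line')->fetchColumn();
```

After both calls the database holds only the first order, because the second was rolled back in its entirety. Blocking I/O is what makes this guarantee simple to reason about.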

In his article the author states the following:

Every page request goes through specific stages, making PHP slower. All client requests in the case of PHP go through several stages. First, they arrive at the web server. After being processed by the PHP interpreter, they are transferred to the front end. In the case of NodeJS, this chain is significantly shorter and is controlled by the framework in which the application is written.

By saying that page requests are transferred to the front end I can only assume that he is talking about the use of a Front Controller. Not everybody uses such an antiquated idea. I certainly don't, as documented in Why don't you use a Front Controller? I prefer to follow Rasmus Lerdorf's advice who, in The no-framework PHP MVC framework, said the following:

Just make sure you avoid the temptation of creating a single monolithic controller. A web application by its very nature is a series of small discrete requests. If you send all of your requests through a single controller on a single machine you have just defeated this very important architecture. Discreteness gives you scalability and modularity. You can break large problems up into a series of very small and modular solutions and you can deploy these across as many servers as you like.

That is why I use the Apache web server as my front controller. My enterprise application has over 4,000 user transactions and each URL points to the script on the file system which provides that particular user transaction. That script is small and simple, as in the following example:

    <?php
    $table_id = "person";                 // identify the Model
    $screen   = 'person.detail.screen.inc'; // identify the View
    require 'std.enquire1.inc';           // activate the Controller
    ?>

As you should be able to see it only accesses those scripts which are required to complete that particular transaction. No more, no less.

So what? Using queues for transaction processing went the way of the Dodo when batch processing was replaced by online processing. Today's users entering data via a computer expect their transaction to be processed NOW and not added to a queue for processing later.

This statement is W-A-A-Y out of date. JSON support first appeared as a PECL extension, has been bundled and enabled by default since PHP 5.2 in 2006, and since version 8.0 it has been a core extension which cannot be disabled.
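For anyone who has not seen it, this short sketch shows the two built-in functions, `json_encode()` and `json_decode()`, doing a round trip. The data is invented for illustration, and the `JSON_THROW_ON_ERROR` flag assumes PHP 7.3 or later.

```php
<?php
// Hypothetical data: the field names are invented for illustration.
$person = ['id' => 42, 'name' => 'Ada', 'roles' => ['admin', 'editor']];

// Encode a PHP array as a JSON string.
$json = json_encode($person, JSON_THROW_ON_ERROR);

// Decode it back into an associative array (second argument true).
$decoded = json_decode($json, true, 512, JSON_THROW_ON_ERROR);

echo $json, PHP_EOL; // {"id":42,"name":"Ada","roles":["admin","editor"]}
```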

In his article the author states the following:

Expansion capabilities such as cluster of processors at runtime level is not possible using PHP. Node runtime inherently supports auto expansion of runtime processors with associated/built-in mechanisms, which are essential for load balancing and tuning.

Clustering is not the responsibility of the language, it is provided by additional third-party software. I developed and maintained my ERP application on a simple laptop, yet the same code has also been deployed in the cloud behind an Amazon Elastic Load Balancer. This means that under high use additional application servers can be fired up automatically to share the load. Easy Peasy Lemon Squeezy.

This again is not the responsibility of the language. It can be provided by third-party software such as an IDE that supports debugging and profiling.

In his article the author states the following:

PHP doesn't separate views and business logic as NodeJS/MEAN Stack combination do. PHP views and server code is written same way, as a result, the code maintainability and scalability is poor, and it's often hard to introduce new functions and thus difficult in managing large code bases.

Separation of Concerns (SoC), which is also known as the Single Responsibility Principle (SRP), is not a function of the language, it is a function of the programmer. A programmer can put all his code in a single monolithic object, or he can use the principle of cohesion to divide the code into logically related units such as those shown in Figure 1, where you can clearly see that I have separated out the logic for Models, Views, Controllers and Data Access Objects into different components. The language did not do that for me, but it did provide the means for me to implement that structure with relative ease.
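The following is a minimal sketch of that kind of separation written in plain PHP. It is not taken from my framework; every class and method name is hypothetical, and the Data Access Object holds its data in an array so that the example runs on its own. It assumes PHP 7.4 or later.

```php
<?php
// Hypothetical sketch: every class and method name here is an assumption.

// Data Access Object: the only place that knows how data is stored.
final class PersonDao
{
    private array $rows = [1 => ['id' => 1, 'name' => 'Ada <Lovelace>']];

    public function find(int $id): ?array
    {
        return $this->rows[$id] ?? null;
    }
}

// Model: business rules, with no HTML and no storage details.
final class PersonModel
{
    private PersonDao $dao;

    public function __construct(PersonDao $dao)
    {
        $this->dao = $dao;
    }

    public function getPerson(int $id): array
    {
        $row = $this->dao->find($id);
        if ($row === null) {
            throw new InvalidArgumentException("Person $id not found");
        }
        return $row;
    }
}

// View: turns data into HTML and does nothing else.
final class PersonView
{
    public function render(array $person): string
    {
        return '<h1>' . htmlspecialchars($person['name'], ENT_QUOTES, 'UTF-8') . '</h1>';
    }
}

// Controller: receives the request and coordinates Model and View.
final class PersonController
{
    private PersonModel $model;
    private PersonView $view;

    public function __construct(PersonModel $model, PersonView $view)
    {
        $this->model = $model;
        $this->view  = $view;
    }

    public function show(int $id): string
    {
        return $this->view->render($this->model->getPerson($id));
    }
}

$controller = new PersonController(new PersonModel(new PersonDao()), new PersonView());
$html = $controller->show(1);
```

Nothing in the language forced the business rule, the HTML and the storage into separate classes, and nothing in the language prevented it either.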

Like any good language, PHP does not force the programmer to use a particular structure; instead it allows him to use whatever structure his mind can imagine. In my programming career I have progressed from monolithic single-tier systems, through 2-tier and then 3-tier systems, so experience has taught me which architecture is best when it comes to designing and building enterprise applications.

In his article the author states the following:

NodeJS comes with few hard dependencies, rules and guidelines, which leaves the room for freedom and creativity in developing your applications. Being an un-opinionated framework, NodeJS does not impose strict conventions allowing developers to select the best architecture, design patterns, modules and features for your next project.

What hard dependencies does PHP have? What rules does PHP have which prevent you from writing cost-effective software? As for guidelines, they are not rules which must be followed, they are merely suggestions which you can either follow or ignore as you see fit. I have seen many "suggestions" from many programmers, and I choose to ignore 99% of them, especially the PHP Standards Recommendations from the Framework Interoperability Group (PHP-FIG). I have created an architecture which works best for me, I use only those design patterns which make sense to me, I follow the rules of modular/structured programming that have proved beneficial to me, and I use only those features of the language which help me achieve my objectives in the simplest and therefore the most maintainable way. My philosophy has always been to achieve complex tasks using simple code, not to achieve simple tasks using complex code.

The author's complaints seem only to apply to enterprise applications which deal with millions of transactions per second and which would require advanced features such as queue based, asynchronous, multi-threaded processing. In my experience of building enterprise applications for the last 20 years with PHP, and 20 years before that with other languages, I have never encountered an application of that size. The vast majority of organisations I have encountered have transaction volumes which can be counted in the hundreds or even thousands per day, but nowhere near millions per second, and it is those who could use an application written in pure PHP without any performance issues whatsoever. Claiming that PHP is no good for any size of enterprise application is nothing less than a terminological inexactitude of gargantuan proportions.

Claiming that PHP is no good for code reusability and maintenance is also erroneous as those factors are the sole responsibility of the programmer. There are numerous features within PHP which enable programmers to produce code which is well-structured, cost-effective, reusable and maintainable, but it is a fact of life that only good programmers are capable of producing good code. Bad programmers will continue to produce bad code irrespective of which language they use.

Here endeth the lesson. Don't applaud, just throw money.